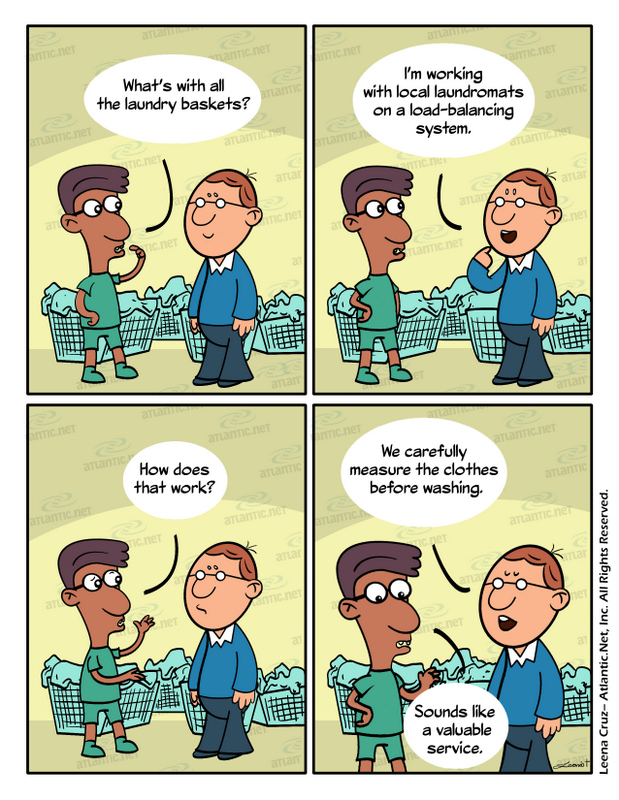

How Does a Load Balancer Work? What Is Load Balancing?

January 30, 2014 by Sam Guiliano under VPS Hosting

The cloud is a structure built fundamentally to optimize the balance of loads across the individual devices that make up the cloud network. Load balancing methods have expanded and grown tremendously in sophistication since the advent of cloud computing. However, this piece is an introduction, so we will focus on the basic idea of load balancing and then look at three sample types: perceptive, fastest response time, and weighted round-robin.

Finally, we will get into a basic explanation of the primary load balancing challenge in a cloud environment: heterogeneity.

What is Load Balancing?

Load balancing distributes work across several different pieces of equipment or hardware components. Typically, loads are balanced across many different servers or, within a single VPS, across its hard drives and CPUs. If you use more than one device or piece of software to perform load balancing, that backup equipment also makes your system more reliable through redundancy.

Load balancing is probably most commonly understood as a way to divide up the incoming requests from Internet Protocol (IP) visitors accessing a website or application. Load balancing was invented and refined for several reasons: to improve the performance and speed of each device, to reduce under-utilization (failing to take advantage of the resources on each machine), and to keep individual machines from hitting their threshold and potentially starting to drop requests.

Load balancing is critical for a busy site or network when it’s difficult to predict how much traffic will reach the servers. (You can see how this relates to cloud computing and its focus on distributing resources and work widely.)

How Does a Load Balancer Work?

Usually, a load-balanced setup involves multiple web servers. If one of them becomes overstressed with too much of the overall load, requests are moved to another machine to be fulfilled. This decreases latency, or processing lag time, because you’re essentially turning a group of separate servers into one big balanced whole, like a super server (but perhaps not quite as heroic). The load balancer determines which servers have the most capacity to handle incoming requests.
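To make "most capacity" concrete, here is a minimal Python sketch. It assumes each server advertises a fixed number of concurrent request slots (`capacity`) and that the balancer tracks how many are in use (`active`); the `Server` class and `route` function are hypothetical names for illustration, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int    # assumed metric: max concurrent requests this server can take
    active: int = 0  # requests currently in progress

    @property
    def spare(self) -> int:
        return self.capacity - self.active


def route(servers: list[Server]) -> Server:
    """Send a request to the server with the most spare capacity (hypothetical helper)."""
    best = max(servers, key=lambda s: s.spare)
    if best.spare <= 0:
        raise RuntimeError("all servers are at capacity")
    best.active += 1  # the chosen server now has one more request in flight
    return best


farm = [Server("web1", capacity=4, active=3), Server("web2", capacity=4, active=1)]
print(route(farm).name)  # web2: 3 free slots versus web1's 1
```

Real load balancers measure capacity in many ways (open connections, CPU, queue depth); counting free slots is just the simplest stand-in.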

The request for data comes in from the end user to the router. (In some setups, the router itself functions as the load balancer; however, a router alone is not enough for a high-volume organization.) The router sends the request to the load balancer, which forwards it to whichever device is most likely to fulfill the request fastest. That server sends its response back to the load balancer, and the router then passes it back to the end user.
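The round trip described above can be traced in a short Python sketch. The server names and response-time estimates are made up, and choosing the lowest estimate is one assumed way to read "most likely to fulfill the request fastest."

```python
def handle_request(request: str, servers: dict[str, float]) -> tuple[str, list[str]]:
    """Trace one request from the end user to a server and back.

    `servers` maps a server name to an estimated response time in ms
    (a hypothetical input; how the estimate is produced is up to the balancer).
    """
    path = ["end user", "router", "load balancer"]

    # The load balancer forwards the request to the server expected to be fastest.
    server = min(servers, key=servers.get)
    path.append(server)
    response = f"{server} served {request}"

    # The response travels back the way it came.
    path += ["load balancer", "router", "end user"]
    return response, path


response, path = handle_request("GET /index.html", {"web1": 40.0, "web2": 25.0, "web3": 60.0})
print(response)  # web2 served GET /index.html
print(" -> ".join(path))
```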

Another major function of load balancing is that it keeps operations running through events that would otherwise cause interruptions, such as routine maintenance or failures (note, however, that you do have the option of 100% uptime). If several servers power your site or application and one of them breaks, your end users will not know it has happened, because they won’t experience a problem: your backend handles it by sending the request to another machine in your server farm.
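A minimal sketch of that failover behavior, in Python: requests rotate through the servers (plain round robin is assumed here as the base strategy), and any server that fails a health check is skipped. `is_healthy` is a hypothetical callback standing in for a real health check.

```python
from itertools import cycle
from typing import Callable


def make_dispatcher(servers: list[str], is_healthy: Callable[[str], bool]) -> Callable[[], str]:
    """Return a function that hands out servers in turn, skipping unhealthy ones."""
    ring = cycle(servers)

    def dispatch() -> str:
        # Try each server at most once per request before giving up.
        for _ in range(len(servers)):
            server = next(ring)
            if is_healthy(server):
                return server
        raise RuntimeError("no healthy servers available")

    return dispatch


down = {"web2"}  # pretend web2 has failed or is down for maintenance
dispatch = make_dispatcher(["web1", "web2", "web3"], lambda s: s not in down)
print([dispatch() for _ in range(4)])  # ['web1', 'web3', 'web1', 'web3']
```

From the user's side nothing changes: every request still gets a server, just never the one that is down.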

Global Server Load Balancing

One broader type of load balancing is called Global Server Load Balancing, or GSLB. With this strategy, the work is sent out to server farms in various regions of the world. Again, we see major similarities to the core concepts of cloud computing.
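One simple way to picture GSLB is "send each user to the nearest healthy region." The Python sketch below does exactly that using great-circle distance; the region names and coordinates are hypothetical. Real GSLB deployments often make this decision at the DNS level and may also weigh load or measured latency, which this sketch leaves out.

```python
import math

# Hypothetical regions with approximate coordinates (latitude, longitude).
REGIONS = {
    "us-east": (40.7, -74.0),       # around New York
    "eu-west": (51.5, -0.1),        # around London
    "ap-southeast": (1.35, 103.8),  # around Singapore
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def pick_region(user: tuple[float, float], healthy: set[str]) -> str:
    """Send the user to the nearest region whose server farm is healthy."""
    candidates = [name for name in REGIONS if name in healthy]
    if not candidates:
        raise RuntimeError("no healthy regions")
    return min(candidates, key=lambda name: haversine_km(*user, *REGIONS[name]))


paris = (48.9, 2.35)
print(pick_region(paris, set(REGIONS)))                # eu-west
print(pick_region(paris, {"us-east", "ap-southeast"}))  # us-east, if eu-west is down
```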

Three Load Balancing Strategies

Three types of load balancing are Perceptive, Fastest Response Time, and Weighted Round Robin. The first method uses both past and present data to determine which device is most likely to be available in a given situation. “Perception” only works well if the algorithm is highly sophisticated, and problems are always possible because there will always be exceptions to the rule.
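The article doesn’t specify how a Perceptive algorithm combines past and present data, so the following Python sketch is only one assumed approach: an exponentially weighted moving average of past response times (the "past") multiplied by the number of requests each server is handling right now (the "present"). The names `ServerStats` and `pick_perceptive` and the 0.2 smoothing factor are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    avg_ms: float    # past: smoothed historical response time
    active: int = 0  # present: requests currently in flight

    def record(self, observed_ms: float, alpha: float = 0.2) -> None:
        """Fold a new measurement into the history (exponentially weighted moving average)."""
        self.avg_ms = alpha * observed_ms + (1 - alpha) * self.avg_ms

    def predicted_ms(self) -> float:
        # Assumed model: a new request waits behind everything already in flight.
        return self.avg_ms * (self.active + 1)


def pick_perceptive(servers: list[ServerStats]) -> ServerStats:
    """Choose the server predicted to finish a new request soonest (hypothetical helper)."""
    return min(servers, key=lambda s: s.predicted_ms())


web1 = ServerStats("web1", avg_ms=20.0, active=4)  # historically fast, but busy now
web2 = ServerStats("web2", avg_ms=50.0, active=0)  # historically slower, but idle
print(pick_perceptive([web1, web2]).name)  # web2 (predicted 50 ms vs 100 ms)
```

Here web1 has the better history, but its current backlog makes web2 the better bet, which a purely historical view would miss.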

Fastest Response Time is a real-time strategy. The load balancer essentially pings each server to determine which one is responding best at that moment. It’s sort of like asking for a volunteer to handle the work.
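In code, Fastest Response Time boils down to "time a probe to each server and pick the quickest." The Python sketch below assumes a caller-supplied `probe` function; here it is simulated with `time.sleep` and made-up delays, whereas a real balancer would send a lightweight request such as a ping or a small HTTP check.

```python
import time
from typing import Callable, Iterable


def pick_fastest(servers: Iterable[str], probe: Callable[[str], object]) -> str:
    """Probe every server once and return the one that answered fastest."""
    timings: dict[str, float] = {}
    for server in servers:
        start = time.perf_counter()
        probe(server)  # stand-in for a real check against the server
        timings[server] = time.perf_counter() - start
    return min(timings, key=timings.get)


# Simulated servers: each probe just sleeps for a fixed, made-up delay in seconds.
DELAYS = {"web1": 0.05, "web2": 0.001, "web3": 0.03}
print(pick_fastest(DELAYS, lambda s: time.sleep(DELAYS[s])))
```

Because the choice rests on live measurements, it can change from one probe round to the next when servers’ timings are close.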

The Weighted Round Robin approach lets the servers take turns performing the work, one after another, but it also “weights” each device according to its power, so more powerful machines take more turns. Again, you can see how the concept of weighting applies to cloud computing.
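Here is a Python sketch of weighted round robin. It uses the "smooth" variant that nginx uses for its upstream round robin, which interleaves the heavier server’s turns with the others instead of sending it one long burst; a naive version that simply repeats each server `weight` times would also count as weighted round robin. The server names and weights are hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin in the 'smooth' style nginx uses for upstream servers."""

    def __init__(self, weights: dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one server, all with positive weights")
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current = {name: 0 for name in self.weights}

    def pick(self) -> str:
        # Every server earns credit equal to its weight on each round...
        for name, weight in self.weights.items():
            self.current[name] += weight
        # ...and the one with the most credit does the work, paying back the total.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


rr = SmoothWeightedRoundRobin({"big": 5, "mid": 1, "small": 1})
print([rr.pick() for _ in range(7)])
# ['big', 'big', 'mid', 'big', 'small', 'big', 'big']
```

In every cycle of 7 requests the "big" server handles 5, matching its weight, with the smaller servers’ turns interleaved among them.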

Load balancing: the special issue of cloud computing

Load balancing in a homogeneous environment is relatively simple because everything is standardized: the environment is controlled, and the math is simple. The heterogeneous architecture typical of a cloud makes load balancing much more complicated, because the devices differ in how healthy they are, how much capacity they have, and where they are located.
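To illustrate why heterogeneity complicates the math, the Python sketch below scores each server on the three differences just mentioned: health (unhealthy nodes are excluded), capacity (load is divided by relative power), and location (a penalty per millisecond of round-trip time). The scoring formula and the 0.01 latency weight are arbitrary assumptions chosen for illustration, not a standard algorithm.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool       # result of the latest health check
    capacity: float     # relative power, e.g. 1.0 for a small VM, 4.0 for a large one
    active: int         # requests currently in flight
    distance_ms: float  # network round-trip time from the client to this node


def score(node: Node, latency_weight: float = 0.01) -> float:
    """Assumed scoring model (lower is better): load relative to capacity plus a distance penalty."""
    return (node.active + 1) / node.capacity + latency_weight * node.distance_ms


def pick(nodes: list[Node]) -> Node:
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy nodes")
    return min(candidates, key=score)


nodes = [
    Node("small", healthy=True, capacity=1.0, active=2, distance_ms=10.0),
    Node("large", healthy=True, capacity=4.0, active=6, distance_ms=40.0),
    Node("near-but-down", healthy=False, capacity=4.0, active=0, distance_ms=5.0),
]
print(pick(nodes).name)  # large: 7/4 + 0.4 = 2.15 beats small: 3/1 + 0.1 = 3.1
```

"small" has fewer requests in flight than "large", yet it is the worse choice once capacity is taken into account; in a homogeneous farm, simply counting requests would have been enough.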

Success with load balancing, whether you are using reliable VPS hosting or not, is all about having a strong team of experts on your side, such as the certified engineers at Atlantic.Net. Contact us today for a consultation. For more information on load balancing, check out our article “What is Server Load Balancing? The Function of a Load Balancer.”

Comic words by Kent Roberts and art by Leena Cruz.