Large Language Models (LLMs) like Phi-3 are changing the way we interact with AI. Instead of relying on cloud services like OpenAI or Google, you can now run these powerful models locally on your own GPU server. This gives you more control over privacy, performance, and cost.

In this tutorial, you’ll learn how to deploy LLMs like Phi-3 on an Ubuntu 24.04 GPU server using a tool called Ollama. Ollama simplifies model management and inference with a local API server. On top of that, we’ll build a lightweight Flask-based Web UI so you can chat with the model through your browser—just like ChatGPT, but running entirely on your hardware.

Prerequisites

- An Ubuntu 24.04 server with an NVIDIA GPU.

- A non-root user or a user with sudo privileges.

- NVIDIA drivers are installed on your server.

Step 1: Install Ollama

Ollama is a lightweight model runtime that makes it easy to run large language models like Phi-3 locally. It manages downloading, caching, and serving models through a built-in API. Installing it on Ubuntu 24.04 is straightforward.

1. Ollama provides a shell script that installs everything for you. Run the following command in your terminal.

curl -fsSL https://ollama.com/install.sh | shYou’ll see output like this:

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.

>>> NVIDIA GPU installed.2. Reload the environment.

source ~/.bashrc3. Start and enable the Ollama service.

systemctl enable ollama

systemctl start ollama4. Verify that Ollama is running.

curl http://localhost:11434Output.

Ollama is runningStep 2: Run a Phi-3 Model with Ollama

Now that the Ollama backend is up and running, it’s time to load and run the Phi-3 model. This model is designed to be compact and fast, making it ideal for local deployments on GPU-enabled servers.

1. Run the Phi-3 model using Ollama.

ollama run phi3This will download the Phi-3 model if it’s not already cached locally, start an interactive session in the terminal, and prompt you to enter text, which Phi-3 will respond to:

>>> What is Ollama?

Ollama seems to be a term that doesn't correspond with well-known technology or concepts as of my last update in April 2023. It might either refer to an emerging

tool, application, system, project, acronym, organization, or even fictional element not recognized within the industry up until then.2. Press Ctrl+D to exit the session.

Step 3: Use Ollama Programmatically with cURL

While the terminal is great for quick testing, Ollama really shines when used programmatically through its local REST API. This allows you to build custom tools, integrate with apps, or even test prompt responses with curl.

Send a prompt to the Ollama backend running Phi-3.

curl http://localhost:11434/api/generate -d '{

"model": "phi3",

"prompt": "Explain what is GPU? Answer in short paragraph",

"stream": false

}'You should get a JSON response like:

{"model":"phi3","created_at":"2025-07-30T14:44:34.276761484Z","response":"A Graphics Processing Unit, or GPU, is a specialized electronic circuit designed to accelerate the creation of computer graphics and facilitate complex calculations for scientific research. Traditionally used solethy primarily by professional graphic designers and video game developers as it excels at processing large blocks of data simultaneously which allows rendering high-quality images with great speed; modern GPUs have evolved into multipurpose processors that can run a wide array of applications including machine learning, cryptocurrency mining, media encoding/decoding, scientific simulations. They are typically found on personal computers as graphics cards or in mobile devices such as smartphones and tablets where computational efficiency is paramount for performance but their use extends far beyond just rendering visuals - they can perform general-purpose calculations quickly due to a parallel processing architecture that's significantly more efficient at handling the matrix and vector operations commonly found in today’s computing tasks.","done":true,"done_reason":"stop","context":[32010,29871,13,9544,7420,825,338,22796,29973,673,297,3273,14880,32007,29871,13,32001,29871,13,29909,29247,10554,292,13223,29892,470,22796,29892,338,263,4266,1891,27758,11369,8688,304,15592,403,278,11265,310,6601,18533,322,16089,10388,4280,17203,363,16021,5925,29889,18375,17658,1304,14419,21155,19434,491,10257,3983,293,2874,414,322,4863,3748,18777,408,372,5566,1379,472,9068,2919,10930,310,848,21699,607,6511,15061,1880,29899,29567,4558,411,2107,6210,29936,5400,22796,29879,505,15220,1490,964,6674,332,4220,1889,943,393,508,1065,263,9377,1409,310,8324,3704,4933,6509,29892,24941,542,10880,1375,292,29892,5745,8025,29914,7099,3689,29892,16021,23876,29889,2688,526,12234,1476,373,7333,23226,408,18533,15889,470,297,10426,9224,1316,408,15040,561,2873,322,1591,1372,988,26845,19201,338,1828,792,363,4180,541,1009,671,4988,2215,8724,925,15061,7604,29879,448,896,508,2189,2498,29899,15503,4220,17203,9098,2861,304,263,8943,9068,11258,393,29915,29879,16951,901,8543,472,11415,278,4636,322,4608,6931,15574,1476,297,9826,30010,29879,20602,9595,29889],"total_duration":5109776741,"load_duration":6017051,"prompt_eval_count":19,"prompt_eval_duration":20751507,"eval_count":189,"eval_duration":5082086586}Step 4: Create a Flask WebUI for Chat Interface

To make interacting with Phi-3 more intuitive, let’s build a simple web interface using Flask. This WebUI will let you send prompts and display model responses, just like a local ChatGPT!

1. Set up a Python virtual environment.

python3 -m venv ollama-env

source ollama-env/bin/activate2. Install Flask and other necessary dependencies.

pip install flask flask-cors requests3. Create a Flask application.

nano app.pyAdd the following code.

from flask import Flask, request, jsonify, render_template

import requests

app = Flask(__name__)

OLLAMA_API = "http://localhost:11434/api/generate"

@app.route("/")

def home():

return render_template("index.html")

@app.route("/chat", methods=["POST"])

def chat():

user_input = request.json.get("prompt")

response = requests.post(OLLAMA_API, json={

"model": "phi3",

"prompt": user_input,

"stream": False

})

return jsonify(response.json())

if __name__ == "__main__":

app.run(host="0.0.0.0", port=5000)4. Make a folder called templates, and create index.html inside it.

mkdir templates

nano templates/index.htmlAdd the following code.

<!DOCTYPE html>

<html>

<head>

<title>Phi-3 Chatbot</title>

</head>

<body>

<h1>Chat with Phi-3</h1>

<textarea id="input" rows="4" cols="50"></textarea><br>

<button onclick="send()">Send</button>

<pre id="output"></pre>

<script>

async function send() {

const prompt = document.getElementById("input").value;

const response = await fetch("/chat", {

method: "POST",

headers: { "Content-Type": "application/json" },

body: JSON.stringify({ prompt })

});

const data = await response.json();

document.getElementById("output").textContent = data.response;

}

</script>

</body>

</html>5. Start the Flask app.

python3 app.py6. Open your browser and visit the URL http://your-server-ip:5000.

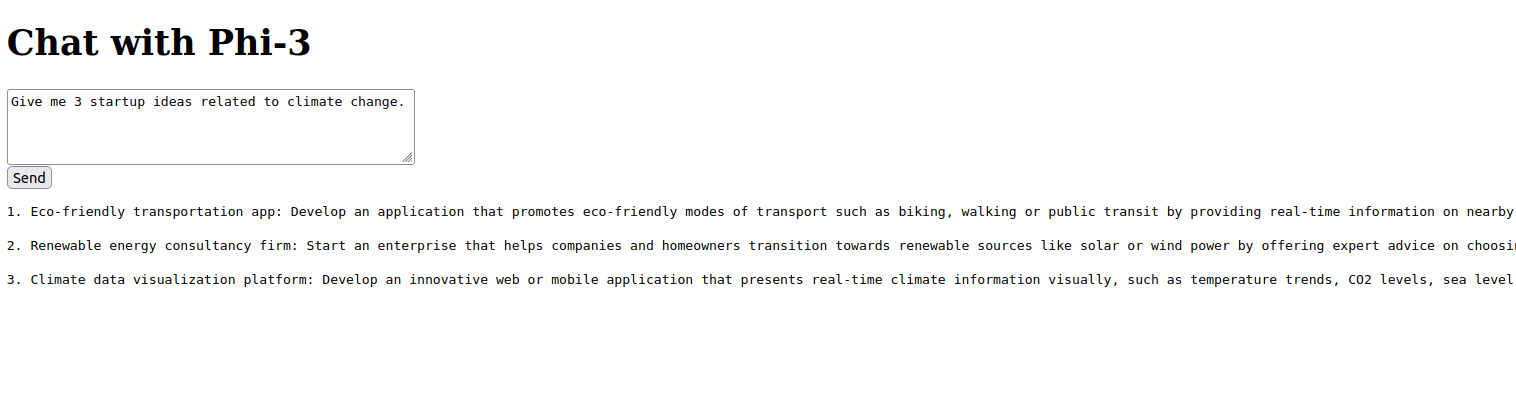

7. Type your prompt, like: “Give me 3 startup ideas related to climate change.”

8. Click Send, and the model will reply below the box!

Conclusion

Running LLMs like Phi-3 locally using Ollama on Ubuntu 24.04 gives you full control over AI inference—no API keys, no rate limits, no privacy trade-offs. With just a few commands, you installed Ollama, ran the Phi-3 model, and built a working Flask-based web interface for real-time chat.