K3s is a lightweight Kubernetes distribution designed for simplicity, scalability, and fast deployments. It is well suited to running Kubernetes clusters in resource-constrained environments or on edge devices. Combined with the NVIDIA GPU Operator, it lets containerized workloads use GPUs, so developers can run GPU-accelerated applications efficiently.

This guide provides a step-by-step tutorial on installing K3s and configuring it with the NVIDIA GPU Operator on Ubuntu 22.04.

Prerequisites

- An Ubuntu 22.04 GPU Server

- At least 8GB of RAM and 20GB of free disk space (for GPU-accelerated workloads).

- Root or sudo privileges
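The memory and disk prerequisites can be checked from a shell before starting. The following is a minimal sketch, assuming a Linux host that exposes /proc/meminfo; the function names and the PASS/FAIL output format are illustrative and not part of any K3s tooling.

```shell
#!/bin/sh
# Preflight sketch: compare this host against the RAM and disk prerequisites.
# Thresholds mirror the list above; the helper names are hypothetical.

MIN_RAM_KB=$((8 * 1024 * 1024))    # 8 GB expressed in kB
MIN_DISK_KB=$((20 * 1024 * 1024))  # 20 GB expressed in kB

# Total RAM in kB, read from /proc/meminfo (Linux only).
total_ram_kb() {
  awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null
}

# Available space in kB on the filesystem that holds the given path.
free_disk_kb() {
  df -Pk "$1" | awk 'NR == 2 {print $4}'
}

# Usage: report LABEL ACTUAL MINIMUM. Prints "PASS LABEL" or "FAIL LABEL".
report() {
  actual=${2:-0}   # treat a missing reading as zero
  if [ "$actual" -ge "$3" ]; then
    echo "PASS $1"
  else
    echo "FAIL $1"
  fi
}

# MemTotal is usually a little below the installed amount, so a machine
# sold as "8 GB" can report FAIL here; treat a near miss with judgment.
report "ram"  "$(total_ram_kb)" "$MIN_RAM_KB"
report "disk" "$(free_disk_kb /)" "$MIN_DISK_KB"
```

The check reads only; it changes nothing on the host.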

Step 1: Verify GPU Availability

Confirming that your NVIDIA GPU is detected and operational is a critical first step. A working driver is something both the NVIDIA Container Toolkit and the GPU Operator depend on.

nvidia-smi

Output.

Mon Nov 25 04:45:16 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.90.07 Driver Version: 550.90.07 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A40-2Q On | 00000000:06:00.0 Off | 0 |

| N/A N/A P0 N/A / N/A | 1MiB / 2048MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

If no GPU is detected, ensure the GPU is properly installed and powered.
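For scripted setups, the same check can be done without reading the table by eye. This is a minimal sketch that uses the `--query-gpu` and `--format` options of `nvidia-smi`; the `gpu_count` helper is a hypothetical name introduced here for illustration.

```shell
#!/bin/sh
# Sketch: count the GPUs that nvidia-smi reports.
# Prints 0 when the tool is missing or exits with an error.

gpu_count() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo 0
    return 0
  fi
  # One line of output per GPU; bail out with 0 if the query itself fails.
  out=$(nvidia-smi --query-gpu=name --format=csv,noheader 2>/dev/null) || { echo 0; return 0; }
  # grep -c prints the number of non-empty lines (and exits 1 when that is 0).
  printf '%s\n' "$out" | grep -c . || true
}

count=$(gpu_count)
if [ "$count" -gt 0 ]; then
  echo "Detected $count NVIDIA GPU(s)"
else
  echo "No NVIDIA GPU detected; check the hardware and the driver installation"
fi
```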

Step 2: Disable Swap

By default, Kubernetes expects swap to be disabled so that it can manage memory predictably. Swap interferes with Kubernetes’ resource scheduling and can lead to unpredictable behavior.

swapoff -a

To disable swap permanently, edit the /etc/fstab file and comment out the swap entry.

Disabling swap ensures that Kubernetes nodes use actual memory for resource allocation. This improves stability and avoids node taints.
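Commenting out the swap entry by hand works fine; for repeatable setups it can also be scripted. The sketch below assumes a conventional fstab layout in which the swap line contains a whitespace-delimited `swap` field. The `comment_out_swap` helper is a hypothetical name, and it only prints the result so that the file can be reviewed before anything is overwritten.

```shell
#!/bin/sh
# Sketch: print an fstab with every active swap entry commented out.
# The input file is left untouched.

comment_out_swap() {
  # Prefix '#' to uncommented lines that contain a whitespace-delimited "swap" field.
  sed -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a throwaway file, so nothing on the system changes.
demo=$(mktemp)
printf '%s\n' \
  'UUID=1234 / ext4 defaults 0 1' \
  '/swap.img none swap sw 0 0' > "$demo"
comment_out_swap "$demo"
rm -f "$demo"
```

Once the printed result looks right for the real /etc/fstab, keep a backup copy of the file before replacing it.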

Step 3: Install K3s

K3s is a lightweight Kubernetes distribution, designed for edge devices and development environments. Its streamlined architecture eliminates unnecessary components, making it faster and easier to deploy than traditional Kubernetes.

Run the following command to download and execute the K3s installation script.

curl -sfL https://get.k3s.io | sh -

This command installs containerd as the default container runtime and configures essential services.

Check the status of the K3s service:

systemctl status k3s

Output.

● k3s.service - Lightweight Kubernetes

Loaded: loaded (/etc/systemd/system/k3s.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2024-11-25 04:46:26 UTC; 33s ago

Docs: https://k3s.io

Process: 9098 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service 2>/dev/null (code=exited, status=0/SUCCESS)

Process: 9100 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)

Process: 9101 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)

Main PID: 9102 (k3s-server)

Tasks: 86

Memory: 1.3G

CPU: 21.304s

CGroup: /system.slice/k3s.service

├─ 9102 "/usr/local/bin/k3s server"

Export the KUBECONFIG environment variable to access the K3s cluster.

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

List the nodes in your K3s cluster.

kubectl get nodes

Output.

NAME STATUS ROLES AGE VERSION

ubuntu Ready control-plane,master 83s v1.30.6+k3s1

Step 4: Install NVIDIA Container Toolkit

The NVIDIA Container Toolkit allows containerized applications to access GPU resources. It is a critical component for running GPU workloads in Kubernetes.

Add the NVIDIA repository.

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

Update the repository index.

apt-get update

Install the container toolkit.

apt-get install -y nvidia-container-toolkit

Check the installed version of the NVIDIA Container CLI:

nvidia-container-cli --version

Output.

cli-version: 1.17.2

lib-version: 1.17.2

Configure Docker for GPU support. This step and the test that follows assume Docker is already installed on the host; K3s itself runs on its bundled containerd.

nvidia-ctk runtime configure --runtime=docker

Restart Docker to apply the configuration:

systemctl restart docker

Run a container with GPU access to verify the setup:

docker run --rm --gpus all nvidia/cuda:12.6.2-cudnn-runtime-ubuntu22.04 nvidia-smi

Output.

==========

== CUDA ==

==========

CUDA Version 12.6.2

Container image Copyright (c) 2016-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.

By pulling and using the container, you accept the terms and conditions of this license:

https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

A copy of this license is made available in this container at /NGC-DL-CONTAINER-LICENSE for your convenience.

Mon Nov 25 04:57:39 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.90.07 Driver Version: 550.90.07 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A40-2Q On | 00000000:06:00.0 Off | 0 |

| N/A N/A P0 N/A / N/A | 1MiB / 2048MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

Step 5: Install Helm

Helm simplifies Kubernetes deployments by managing application packaging and upgrades.

Install Helm using the script below.

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

Verify the Helm installation.

helm version

Output.

version.BuildInfo{Version:"v3.16.3", GitCommit:"cfd07493f46efc9debd9cc1b02a0961186df7fdf", GitTreeState:"clean", GoVersion:"go1.22.7"}

Step 6: Deploy NVIDIA GPU Operator

The NVIDIA GPU Operator automates the management of NVIDIA GPU drivers, monitoring tools, and runtime in Kubernetes.

Add the NVIDIA Helm repository.

helm repo add nvidia https://nvidia.github.io/gpu-operator

Update the repository.

helm repo update

Install the GPU Operator.

helm install --wait --generate-name nvidia/gpu-operator

Verify the deployment.

kubectl get pods | grep nvidia

This command lists the NVIDIA-related pods in the current namespace:

Please note: it may take some time for the pods to start.

nvidia-container-toolkit-daemonset-cl2s5 1/1 Running 0 2m36s

nvidia-cuda-validator-r6pl4 0/1 Evicted 0 2m26s

nvidia-dcgm-exporter-bt8jp 1/1 Running 0 2m35s

nvidia-device-plugin-daemonset-gtgwv 1/1 Running 0 2m35s

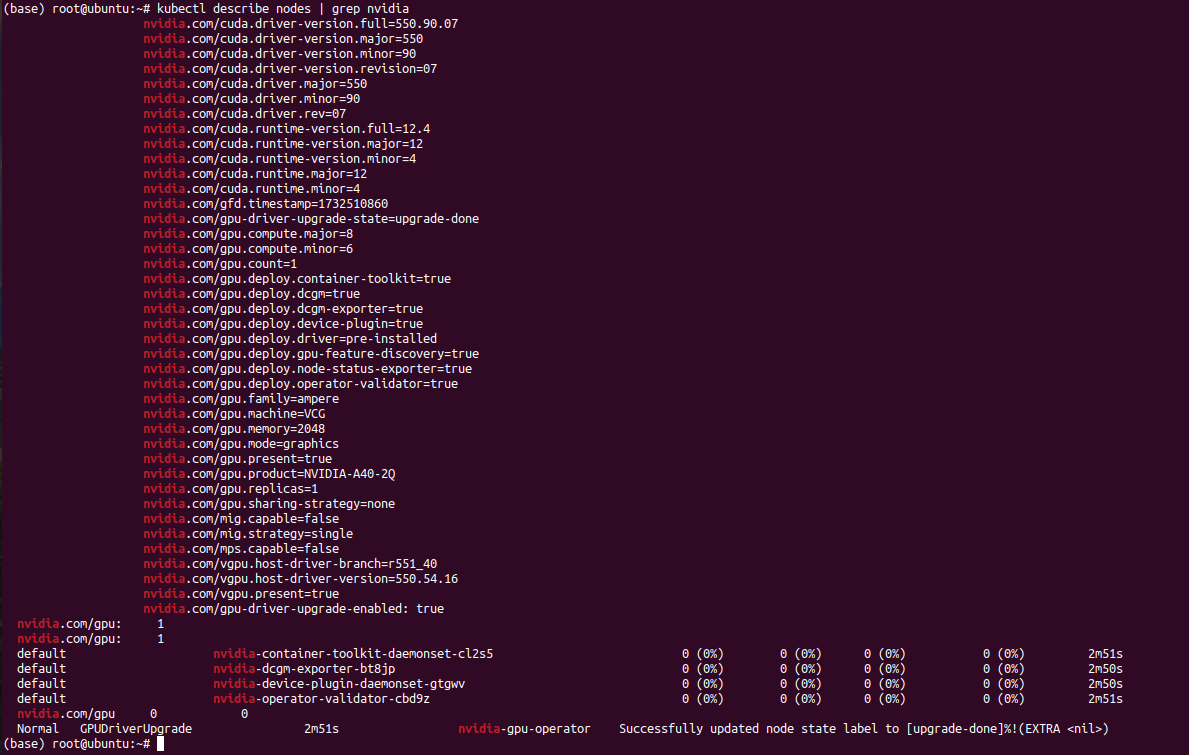

nvidia-operator-validator-cbd9z 0/1 Init:2/4 0 2m36s

Ensure that the NVIDIA GPU Operator has configured the nodes correctly.

kubectl describe nodes | grep nvidia

You should see the information about the GPU resources managed by the NVIDIA GPU Operator.
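A further way to confirm that the GPU is schedulable is to run a short-lived pod that requests it. The sketch below prints a manifest that reuses the CUDA image from Step 4 and asks for one `nvidia.com/gpu`. The pod name `gpu-smoke-test` and the `gpu_test_manifest` helper are illustrative names, not something the operator creates. Depending on how the container runtime is configured, the pod may also need `runtimeClassName: nvidia`; treat that as an assumption to verify on your own cluster.

```shell
#!/bin/sh
# Sketch: print a one-shot pod manifest that requests a single GPU
# and runs nvidia-smi. Nothing is applied to the cluster by this block.

gpu_test_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-smoke-test
spec:
  restartPolicy: Never
  containers:
    - name: cuda
      image: nvidia/cuda:12.6.2-cudnn-runtime-ubuntu22.04
      command: ["nvidia-smi"]
      resources:
        limits:
          nvidia.com/gpu: 1
EOF
}

# Show the manifest for review.
gpu_test_manifest

# To run it against the cluster (assumes KUBECONFIG is exported as in Step 3):
#   gpu_test_manifest | kubectl apply -f -
#   kubectl logs gpu-smoke-test
#   kubectl delete pod gpu-smoke-test
```

If the pod completes and its log shows the familiar `nvidia-smi` table, the device plugin is advertising the GPU and containers can reach it.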

Conclusion

You’ve successfully installed K3s with the NVIDIA GPU Operator on Ubuntu 22.04. This setup allows you to run GPU-accelerated workloads on a lightweight Kubernetes cluster, enabling efficient deployment of machine learning, AI, and other compute-intensive applications. Try to deploy K3s on Atlantic.Net GPU Hosting.