Table of Contents

- What Is Infrastructure as a Service (IaaS)?

- IaaS Architecture

- Multi-Tenant Architecture in the Cloud

- What Is Cloud Hosting?

- What Is a Bare-Metal Cloud?

- IaaS vs PaaS vs SaaS

- Public Cloud vs. Private Cloud vs. Hybrid Cloud vs. Multi Cloud

- Top Cloud Computing Providers

- IaaS Pricing: AWS vs Azure vs Google Cloud

- What Is IoT in the Cloud?

- IaaS High Availability

- Adopting IaaS: Cloud Migration Strategies

- AWS Migration Best Practices

- Azure Migration Best Practices

- Google Cloud Migration Best Practices

- Running Mission Critical Applications in the Cloud

- Deep Learning in the Cloud

- Kubernetes in the Cloud

- See Additional Guides on IaaS Topics

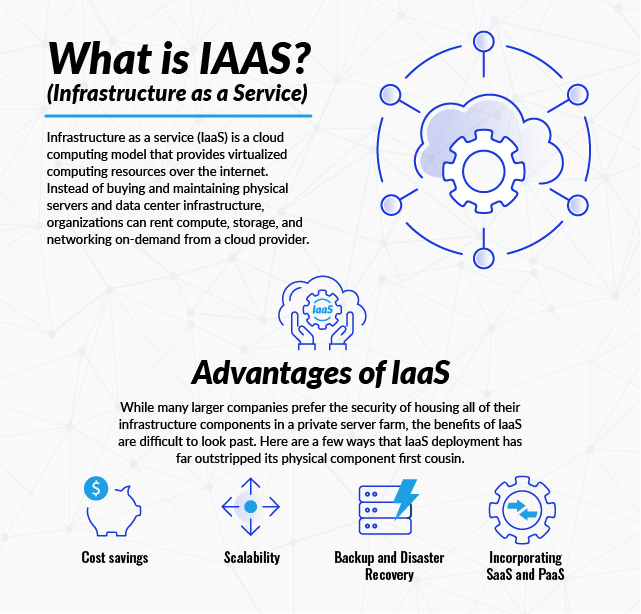

What Is Infrastructure as a Service (IaaS)?

Infrastructure as a service (IaaS) is a cloud computing model that provides virtualized computing resources over the internet. Instead of buying and maintaining physical servers and data center infrastructure, organizations can rent compute, storage, and networking on-demand from a cloud provider. This model gives businesses the flexibility to scale up or down based on workload requirements while paying only for what they use.

IaaS supports a range of use cases from hosting websites to running large-scale enterprise applications. Users have control over operating systems, deployed applications, and configurations, while the cloud provider manages the underlying physical infrastructure. This makes IaaS ideal for organizations that need computing power and flexibility without the burden of managing hardware.

IaaS Architecture

In the IaaS model, cloud providers host infrastructure such as servers, storage, networking hardware, and hypervisors, so organizations do not need to run this equipment in their own on-premises data centers.

IaaS providers offer a variety of services over this infrastructure. These include:

- Multi tenancy and billing management

- Logging and monitoring

- Security

- Clustering, failover and load balancing

- Backup, replication and recovery

Most IaaS providers offer policy-driven services, allowing users to implement a high level of automation and coordinate critical infrastructure tasks. For example, users can implement policies that automate load balancing to maintain application performance and availability.
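As a rough illustration of such a policy, the sketch below rotates requests across a pool of VMs and skips any VM that a health check has marked as down. The class, its method names, and the VM names are hypothetical and chosen only for illustration; real IaaS load balancers are configured through the provider's own services, not through code like this.

```python
class RoundRobinBalancer:
    """Hypothetical balancer: rotates through backends, skipping unhealthy ones."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # index of the next backend to try

    def mark_down(self, backend):
        """Record a failed health check."""
        self.healthy.discard(backend)

    def mark_up(self, backend):
        """Record a passed health check for a known backend."""
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        """Return the next healthy backend in rotation."""
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


balancer = RoundRobinBalancer(["vm-a", "vm-b", "vm-c"])
first_round = [balancer.pick() for _ in range(4)]
balancer.mark_down("vm-b")  # simulate a failed health check
after_failure = [balancer.pick() for _ in range(3)]
```

Once `vm-b` is marked down, traffic continues to flow to the remaining VMs without manual intervention, which is the behavior an automated availability policy aims for.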

IaaS customers can access resources and services over a wide area network (WAN) such as the Internet, and instruct the cloud provider to deploy a complete application stack.

For example, a user can connect to the IaaS platform remotely to create virtual machines (VMs). They can install an operating system and an enterprise application on each virtual machine, deploy local disk storage, large-scale object storage, and database systems. The user can then use the provider’s services for cost tracking, performance monitoring, network traffic balancing, disaster recovery management, and more.

Most IaaS users consume cloud services via a cloud provider, such as Amazon Web Services or Microsoft Azure. This is known as public cloud computing. Organizations can also set up their own IaaS infrastructure, creating what is known as a private cloud, or combine it with public cloud resources to create a hybrid cloud.

Learn more in the in-depth guides to Load Balancers

Related product offering: Radware Alteon | Application Delivery and Security

Multi-Tenant Architecture in the Cloud

Multi-tenancy makes it possible for one software application or infrastructure component to serve multiple customers. Customers are referred to as “tenants”. Each tenant might have the ability to customize the infrastructure or service they receive, but they usually do not have full access to its configuration or source code.

A multi-tenant architecture runs applications or infrastructure in a shared environment. Every tenant is logically separated, while working on resources that are physically integrated with those of other tenants. This means that a multi-tenant component shares one instance of configurations, user management, and data.

Most cloud environments are based on the multi-tenancy model. Both public clouds and private clouds use multi-tenancy, allowing multiple users or groups (whether from different organizations or the same organization) to run workloads separately. For example, in a multi-tenant public cloud environment, multiple organizations or users can run workloads on the same physical server. However, each user only sees their own workloads, a concept known as isolation.
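The idea of logical isolation on shared resources can be sketched in a few lines. In the example below, one shared store instance serves every tenant, but each read and write is scoped by a tenant ID, so a tenant only ever sees its own entries. The class name, method names, and tenant names are hypothetical, and a real platform would enforce isolation at many more layers (hypervisor, network, identity).

```python
class MultiTenantStore:
    """Hypothetical shared store: one instance, data partitioned per tenant."""

    def __init__(self):
        # tenant_id -> {key: value}; all tenants share this single structure
        self._data = {}

    def put(self, tenant_id, key, value):
        self._data.setdefault(tenant_id, {})[key] = value

    def get(self, tenant_id, key):
        # Lookups never cross tenant boundaries
        return self._data.get(tenant_id, {}).get(key)

    def list_keys(self, tenant_id):
        return sorted(self._data.get(tenant_id, {}))


store = MultiTenantStore()
store.put("acme", "vm-1", "running")
store.put("globex", "vm-1", "stopped")  # same key, different tenant
```

Both tenants use the key `vm-1`, yet `store.get("acme", "vm-1")` and `store.get("globex", "vm-1")` return different values, and a third tenant sees nothing at all.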

Learn more in the in-depth guide to multi-tenant architecture

What Is Cloud Hosting?

Cloud hosting is a paradigm that allows organizations to leverage cloud computing without the full complexity of public cloud or private cloud deployments.

Cloud hosting is a more flexible, scalable, and reliable alternative to traditional hosting methods like shared hosting and dedicated hosting. Unlike these traditional methods, where an organization’s resources run on a single server in a provider’s data center, cloud hosting leverages large-scale cloud computing environments, enabling easier scalability and redundancy.

Here are the primary types of cloud hosting:

- Managed cloud hosting: In managed cloud hosting, the service provider takes care of the setup, administration, management, and support of the cloud servers, freeing up the business to focus on its core competencies.

- Cloud VPS (Virtual Private Server): This is a type of cloud hosting where the user has an allocated amount of server resources. Unlike shared hosting, resources aren’t shared with other users. This type of hosting is scalable and can be adjusted as needed.

- Dedicated server hosting: This type of service allows organizations to use a dedicated server hosted within a cloud platform.

Learn more in our detailed guide to what is cloud hosting


Learn more in our detailed guide to dedicated server hosting

Related product offering: VPS hosting by Atlantic.net

What Is a Bare-Metal Cloud?

A bare-metal cloud is a type of cloud service where the client leases physical servers from a cloud service provider. Unlike traditional cloud models where multiple users share resources of the same physical server, the bare-metal cloud provides dedicated servers to a single client. This eliminates the “noisy neighbor” problem often encountered in shared hosting environments.

The term “bare-metal” refers to the direct access granted to the underlying physical servers.

This means that users are not limited to the resources provided by the hypervisor or virtualization software, but they can access the full capacity of the physical servers. This includes the server’s processor power, memory, storage, and networking resources.

Bare-metal cloud combines the best features of traditional dedicated server hosting with the flexibility and scalability of cloud hosting. You get the raw performance of physical servers and the ability to scale up or down as your needs change. And unlike traditional dedicated servers, you can provision and deprovision servers in a matter of minutes, not days or weeks.

Related product offering: bare metal servers by Atlantic.net

IaaS vs PaaS vs SaaS

What is Platform as a Service (PaaS)?

Platform as a Service (PaaS) provides some infrastructure components, along with additional managed services and software. A PaaS is a framework developers can use to create their own applications, focusing on developing software functionality for their end users. The cloud provider manages complex back-end infrastructure, including computing resources, operating systems, software updates, storage, networking, and integrations.

What is Software as a Service (SaaS)?

Software as a service (SaaS) is a popular choice for cloud users. Because SaaS delivers software to end users over the Internet, most SaaS applications run directly from a web browser and do not need to be downloaded or installed by the customer. PaaS, on the other hand, delivers a software development platform. The majority of cloud consumers do not need PaaS.

This web services model eliminates the need for IT staff to download and install applications on local devices. SaaS enables providers to simplify service and support for their business, while solving potential technical problems such as data and storage management, middleware, servers, and networking.

Key Differences Between IaaS, PaaS and SaaS

- PaaS is based on the IaaS model: in addition to infrastructure components, the provider manages the operating systems, middleware, and other operating environments for cloud users. PaaS simplifies deployment but offers more limited flexibility compared to a pure IaaS model.

- SaaS manages all infrastructure and applications needed for the end user: SaaS users don’t need to install or deploy anything. They can typically just log in and start using the provider’s application, running on the provider’s infrastructure. Users can customize the behavior of applications, add their own data, and restrict access. Almost all other aspects are the responsibility of the SaaS provider.

Public Cloud vs. Private Cloud vs. Hybrid Cloud vs. Multi Cloud

There are four primary types of cloud computing environments: public, private, hybrid, and multi cloud:

- Public cloud is the most common form of cloud computing. In this model, service providers offer resources, such as servers and storage, over the Internet. Public cloud services are generally affordable and highly scalable, but they may lack the security and control desired by some businesses.

- Private cloud is a cloud service that is not shared with any other organization. The servers and storage are dedicated to a single business, providing greater control and security. However, private clouds are more expensive and require more management than public clouds.

- Hybrid cloud is a blend of public and private clouds. It allows businesses to keep sensitive information in a private cloud while using the public cloud for non-sensitive data and applications. Hybrid clouds offer the best of both worlds, combining the security of private clouds with the cost-effectiveness and scalability of public clouds.

- Multi cloud refers to the use of multiple cloud services from different providers. This approach allows businesses to avoid vendor lock-in, optimize costs, and leverage the unique strengths of each cloud provider. Multi cloud strategies enable greater flexibility and resilience, as workloads can be distributed across various platforms to meet specific requirements and ensure continuity.


Related technology updates: [Report] Optimizing in a Multi-Cloud World

Examples of IaaS Use Cases

IaaS enables organizations to access scalable, on-demand infrastructure without maintaining physical hardware. This model is suited for a wide range of use cases across industries and technical needs.

- Test and Development – Quickly provision environments for application development and testing, supporting agile workflows and CI/CD pipelines.

- Web Applications – Host web apps with dynamic scaling and built-in load balancing, minimizing upfront infrastructure investment.

- Cloud hosting – Distributes website or application data across multiple virtual servers, offering scalability, high availability, and flexibility without physical server management. It supports a wide variety of workloads and operational needs. Learn about Atlantic.net cloud hosting solutions.

- Dedicated hosting – Provides organizations with exclusive use of physical servers, delivering consistent performance, control, and security for high-demand or regulated workloads. Learn about Atlantic.net dedicated server hosting.

- Storage, Backup, and Recovery – Leverage elastic storage and automated backup solutions without complex on-prem management. Learn more in the detailed guide to cloud storage

- High-Performance Computing (HPC) – Run simulations, financial models, or scientific computations using scalable compute clusters available on demand.

- Big Data Analytics – Utilize distributed storage and compute resources for real-time analytics, machine learning, and large-scale data processing.

- Learning Management Systems (LMS) – Deploy scalable back-ends for LMS platforms that require high uptime and adaptable performance, supporting e-learning for organizations, educational institutions, or corporate training platforms. Learn more in the detailed guide to Learning Management Systems.

- Digital Asset Management (DAM) – Store and serve media assets globally with high availability and integrated security controls. Learn more in the detailed guide to Digital Asset Management

- Infrastructure as Code (IaC) – Automate infrastructure provisioning and management through code, using tools like Terraform or AWS CloudFormation. IaaS provides the underlying resources that IaC tools configure and orchestrate, enabling consistent, repeatable deployments.

Learn more in the detailed guide to Infrastructure as Code

Related product offering: GitOps Software Delivery Platform by Codefresh

Related technology update: [Blog] How to Model Your GitOps Environments and Promote Releases between Them

What Is CloudOps?

CloudOps is the combination of continuous operations and continuous delivery in the cloud. It is about operating your infrastructure and applications in a way that is optimized, efficient, reliable, secure, and cost-effective.

In the context of IaaS, CloudOps involves managing and maintaining the infrastructure elements provided by the IaaS provider. This could include server instances, storage, and networking components. The goal is to ensure that these resources are optimally configured, secure, and that they are delivering value to the business.

CloudOps also involves monitoring and managing the performance and availability of these resources. This includes setting and managing service levels, as well as identifying and resolving any issues that may arise.

Learn more in our detailed guide to CloudOps

Related product offering: NetApp Spot | Cloud Optimization Solutions

Related technology update: [Report] 2023 State of CloudOps

Learn more in our detailed guide to: Cloud Optimization

Related technology update: [Report] GigaOm 2024 Radar for Cloud Resource Optimization

Learn more in our detailed guide to: Cloud Cost Optimization

What Is FinOps?

FinOps, short for Financial Operations, is a discipline that combines financial management, operations, and cloud engineering to optimize cloud spending and maximize business value. It focuses on gaining financial control and accountability over cloud resources, ensuring that cloud investments are made efficiently and aligned with business objectives.

FinOps involves several key practices:

- Visibility: Ensuring that cloud costs are transparent and understandable. This includes detailed cost reporting and usage analytics to track where and how resources are being consumed.

- Optimization: Identifying areas where cloud spending can be reduced without compromising performance or reliability. This includes rightsizing instances, leveraging reserved instances, and eliminating unused resources.

- Governance: Implementing policies and controls to manage cloud spending. This involves setting budgets, defining cost allocation rules, and establishing spending limits to prevent cost overruns.

- Collaboration: Facilitating communication and cooperation between finance, operations, and engineering teams. This ensures that financial considerations are integrated into the cloud management process and that all stakeholders are aligned on cost management goals.

By integrating FinOps into cloud operations, organizations can better manage their cloud costs, enhance financial predictability, and ensure that their cloud investments support overall business strategy.

Learn more in the detailed guide to FinOps

FinOps on AWS

FinOps on AWS involves leveraging various tools and practices provided by Amazon Web Services to manage and optimize cloud costs effectively. Key components include:

- AWS Cost Explorer: This tool provides detailed insights into your AWS spending and usage. It helps in analyzing cost trends, identifying cost drivers, and exploring saving opportunities. With Cost Explorer, you can create custom reports and visualize data to understand spending patterns better.

- AWS Budgets: AWS Budgets allows you to set custom cost and usage budgets. You can receive alerts when usage or costs exceed the predefined thresholds, enabling proactive management of cloud expenses. This tool also integrates with AWS Cost Explorer for detailed budget analysis.

- AWS Trusted Advisor: Trusted Advisor offers real-time recommendations to optimize your AWS environment. It provides insights on cost optimization, security, fault tolerance, and performance improvements. The cost optimization checks include recommendations for unused or idle resources, reserved instance usage, and opportunities for savings plans.

- AWS Savings Plans and Reserved Instances: These purchasing options allow you to commit to using a specific amount of compute power or instances over a one- or three-year period, in exchange for significant discounts compared to on-demand pricing. Utilizing these plans effectively can lead to substantial cost savings.

- Cost Allocation Tags: Implementing cost allocation tags helps in categorizing and tracking AWS resources. By tagging resources with meaningful labels, organizations can allocate costs to specific projects, departments, or teams, enabling more granular cost management and accountability.
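To make the cost allocation idea concrete, the following sketch groups billing line items by a tag and totals the cost per owner. The line-item structure, the `team` tag key, and the dollar amounts are invented for illustration and do not reflect the format of any AWS billing export.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key):
    """Sum cost per value of `tag_key`; resources without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical monthly line items (amounts are made up)
line_items = [
    {"resource": "i-web-1", "cost": 120.0, "tags": {"team": "web"}},
    {"resource": "i-web-2", "cost": 30.0, "tags": {"team": "web"}},
    {"resource": "bucket-logs", "cost": 45.5, "tags": {"team": "data"}},
    {"resource": "i-orphan", "cost": 10.0, "tags": {}},
]

costs_by_team = allocate_costs(line_items, "team")
```

The `untagged` bucket is often the most useful output in practice, since it shows how much spend cannot yet be attributed to any project or team.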

Learn more in the detailed guide to AWS cost optimization

Related product offering: Finout | Enterprise-Grade FinOps Platform

Related technology update: [Webinar] How To Create a Cost-Effective AWS Environment

FinOps on Azure

FinOps on Azure focuses on using Microsoft’s suite of tools and services to optimize cloud spending and improve financial management. Key practices include:

- Azure Cost Management and Billing: This service provides comprehensive insights into your Azure spending. It includes cost analysis, budgeting, and forecasting tools that help in tracking and managing cloud expenses. You can set budgets and alerts to monitor spending and ensure it stays within the allocated limits.

- Azure Advisor: Azure Advisor provides personalized recommendations to optimize your Azure resources for cost, security, performance, and availability. The cost recommendations include advice on rightsizing or shutting down underutilized resources and taking advantage of reserved instances or savings plans.

- Azure Reservations and Spot VMs: Azure Reservations allow you to commit to one- or three-year plans for significant cost savings on compute resources. Spot VMs offer unused Azure capacity at discounted rates, ideal for non-critical or flexible workloads.

- Azure Cost Allocation and Tagging: By implementing a robust tagging strategy, organizations can allocate costs accurately to different projects, departments, or business units. Tags facilitate detailed cost reporting and accountability, making it easier to track and manage expenses.

- Azure Hybrid Benefit: This feature allows you to use your existing on-premises Windows Server and SQL Server licenses with Software Assurance to save on Azure costs. It provides substantial savings for workloads that can leverage this benefit.
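The trade-off behind commitment-based discounts such as reservations comes down to simple arithmetic: a commitment is billed for every hour of the term, while on-demand capacity is billed only for hours actually used. The sketch below computes the utilization at which the two cost the same. The hourly rates and the 730-hour month are assumptions for illustration, not published Azure prices.

```python
def break_even_utilization(on_demand_hourly, committed_hourly):
    """Fraction of hours a VM must run for the commitment to cost less."""
    return committed_hourly / on_demand_hourly


def monthly_cost(on_demand_hourly, committed_hourly, utilization, hours_in_month=730):
    """Return (on_demand_cost, committed_cost) for one month."""
    on_demand = on_demand_hourly * hours_in_month * utilization
    committed = committed_hourly * hours_in_month  # billed whether used or not
    return on_demand, committed


# Hypothetical rates: $0.10/hour on demand, $0.06/hour effective when committed
threshold = break_even_utilization(0.10, 0.06)
half_time = monthly_cost(0.10, 0.06, utilization=0.5)
mostly_on = monthly_cost(0.10, 0.06, utilization=0.9)
```

With these assumed rates, the commitment only pays off for a VM that runs more than about 60% of the time; a VM running half the time is cheaper on demand.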

FinOps on Google Cloud

FinOps on Google Cloud involves utilizing Google’s tools and practices to manage cloud costs effectively. Key components include:

- Google Cloud Cost Management: This suite of tools includes detailed cost reporting, budget tracking, and cost forecasting. It allows you to monitor spending, analyze cost trends, and set budget alerts to prevent unexpected expenses.

- Google Cloud Pricing Calculator: This tool helps in estimating the cost of Google Cloud services based on your specific usage patterns. It provides a detailed breakdown of costs, enabling you to plan and optimize your cloud spending.

- Committed Use Contracts and Sustained Use Discounts: Google Cloud offers discounts for committing to use specific resources over a period (one or three years) and automatic sustained use discounts for consistent usage of certain resources. These options help in reducing overall cloud costs significantly.

- Resource Management and Tagging: Implementing a robust tagging strategy in Google Cloud allows for detailed cost tracking and allocation. Tags can be used to categorize resources by project, team, or department, facilitating more accurate financial reporting and accountability.

- Google Cloud Recommender: This tool provides actionable recommendations to optimize your Google Cloud environment. It includes suggestions for rightsizing VMs, removing unused resources, and optimizing storage, helping to reduce costs without impacting performance.

Learn more in the in-depth guide to cloud cost management

Related technology updates:

- [Webinar] Driving a Successful Company-Wide FinOps Cultural Change

- [Webinar] Mastering Kubernetes Spend-Efficiency

Top Cloud Computing Providers

General Purpose Cloud Providers

- Amazon Web Services: AWS launched its first IaaS services in 2006, and is today the leading provider of public cloud computing. It provides a complete computing stack that enables organizations to deploy almost any combination of software and hardware infrastructure.

- Microsoft Azure: Microsoft Azure is the world’s second largest cloud provider. It is the obvious choice for hosting Microsoft-based systems on the cloud, and is a common choice for government agencies. It also runs an increasing number of non-Microsoft workloads, including Linux, HPC, and SAP systems.

- Google Cloud: Offers a more limited set of services compared to AWS and Azure, but competes on price and provides the Anthos Platform, which makes it easier to create multi cloud solutions and avoid vendor lock-in.

- IBM Cloud: A group of enterprise cloud computing services developed by IBM. Includes IaaS, SaaS, and PaaS solutions supporting public, private, and hybrid cloud models.

- Alibaba Cloud: The leading cloud provider in China, which is forging alliances to become a key player across Asia.

- Hewlett Packard Enterprise: Hewlett Packard Enterprise’s hybrid cloud strategy focuses on its hardware stack, including Aruba wireless networking and edge computing solutions, and software platforms like GreenLake, SimpliVity, and Synergy. HPE has partnerships with Red Hat, VMware, and the major cloud providers.

- Oracle Cloud: Oracle’s public cloud has a competitive advantage in IoT, OLTP, microservices, artificial intelligence and machine learning. It provides its popular database software as IaaS and PaaS offerings, and also provides the Oracle Data Cloud, which enables big data analytics for business data.

- Dell Technologies/VMware: Dell acquired VMware and is using it to provide a true multi cloud offering. VMware is today fully container based, and enables companies to run the same workloads on leading public clouds like Amazon, Azure and Google Cloud, as well as on premises. VMware complements its cloud offering with its acquisition of CloudHealth, a cloud cost management solution.

Related product offering: Finout | Enterprise-Grade FinOps Platform

Cloud-Native Analytics Platforms

Cloud-native analytics platforms are designed to run entirely in the cloud, offering scalable, flexible, and efficient solutions for data storage, processing, and analysis. These platforms separate compute and storage resources, enabling independent scaling and cost optimization. They support various data types and provide advanced analytics capabilities, including real-time processing and machine learning integration.

Here are some leading cloud-native analytics platforms:

- Snowflake: A cloud-based data warehouse that separates compute and storage, allowing for independent scaling. Supports structured and semi-structured data formats like JSON and Parquet. Offers features like automatic scaling, data sharing, and support for multiple cloud providers. Utilizes ANSI SQL for querying and provides near-zero maintenance. Learn more in the in-depth guide to Snowflake pricing.

- Amazon Redshift: A fully managed, petabyte-scale data warehouse service by AWS. Employs columnar storage and parallel processing to handle large datasets efficiently. Integrates with AWS services like S3 and supports querying data directly from S3 using Redshift Spectrum. Offers features like concurrency scaling and materialized views.

- Google BigQuery: A serverless, highly scalable data warehouse that enables super-fast SQL queries using the processing power of Google’s infrastructure. Automatically handles resource provisioning and scaling. Supports real-time analytics and integrates with various Google Cloud services. Pricing is based on the amount of data processed per query.

- Azure Synapse Analytics: An analytics service that brings together big data and data warehousing. Allows querying data using serverless on-demand or provisioned resources. Integrates with Azure services like Power BI and Azure Machine Learning. Supports both structured and unstructured data and provides a unified experience for ingesting, preparing, managing, and serving data.

- Databricks: A unified data analytics platform built on Apache Spark. Combines data engineering, data science, and machine learning workflows. Supports various data formats and provides collaborative notebooks for data exploration. Offers features like Delta Lake for reliable data lakes and MLflow for managing the machine learning lifecycle.

IaaS Pricing: AWS vs Azure vs Google Cloud

The following table will help you understand the basic pricing model offered by the big three cloud providers.

| Price Parameter | AWS | Azure | Google Cloud |
| --- | --- | --- | --- |
| Compute Instances | | | |
| Reserved Instances | Commit to 1-3 years with three payment options: upfront, partial payment and the balance monthly, or monthly payment | Commit to 1-3 years and pay balance upfront | Offers a discount for commitment to 1 or 3 years, with monthly payments |
| Spot Instances | Bid for unused EC2 capacity at a potentially lower price. Prices fluctuate based on supply and demand. Instances can be terminated by AWS with a two-minute notification if demand rises or spot price exceeds the bid. | Bid for unused Azure compute capacity at reduced prices. Prices can change based on availability and demand. Virtual Machines can be evicted based on capacity or the set max price. | Offered at a lower fixed price than standard VMs. Can be terminated by Google Cloud if resources are needed elsewhere. Designed for batch jobs and fault-tolerant workloads; run for up to 24 hours. |
| Object Storage: Frequent Access | Basic rate for first 50 TB, discounts for 51-500 TB and over 500 TB | Basic rate for first 50 TB, discounts for 51-500 TB and over 500 TB | Flat rate per GB per month |
| Object Storage: Infrequent Access | Three tiers: Infrequent access, One Zone Infrequent Access, Archive Storage | Two tiers: Infrequent access, Archive storage | Two tiers: Nearline storage, Coldline storage |
| Block Storage | Two tiers: HDD, SSD, and free tier up to 30GB | Two tiers: HDD, SSD | Offers standard local/regional volumes, SSD local/regional volumes, multi-regional snapshot storage |
| Rating Frequency | Per-Hour for most services, Per-Second for EC2 and Reserved instances | Per-Hour for most services, Per-Second offered for Windows VMs and Container instances | Per-Second pricing for all services |
| Official Pricing Information | Official pricing, Cost calculator | Official pricing, Cost calculator | Official pricing, Cost calculator |
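The tiered object storage pricing in the table (a base rate for the first 50 TB, then discounts for 51-500 TB and over 500 TB) can be worked through with a short calculation. The sketch below is only an illustration of how graduated tiers combine: the per-TB rates are placeholders, not actual AWS or Azure prices, so consult the official pricing pages for real figures.

```python
def tiered_monthly_cost(stored_tb, tiers):
    """Compute a graduated bill: each tier's rate applies only to the TB inside it.

    `tiers` is a list of (tier_size_tb, rate_per_tb); a size of None means
    the tier is unbounded and must come last.
    """
    remaining = stored_tb
    cost = 0.0
    for size_tb, rate in tiers:
        if remaining <= 0:
            break
        used = remaining if size_tb is None else min(remaining, size_tb)
        cost += used * rate
        remaining -= used
    return cost


# Placeholder rates in dollars per TB-month (assumed, not real prices)
EXAMPLE_TIERS = [
    (50, 23.0),    # first 50 TB
    (450, 22.0),   # 51-500 TB
    (None, 21.0),  # over 500 TB
]

small_bill = tiered_monthly_cost(10, EXAMPLE_TIERS)
medium_bill = tiered_monthly_cost(60, EXAMPLE_TIERS)
large_bill = tiered_monthly_cost(600, EXAMPLE_TIERS)
```

Note that crossing a tier boundary lowers the rate only for the data above the boundary; the first 50 TB is always billed at the base rate.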


Related technology updates: [Report] GigaOm 2024 Radar for Cloud Resource Optimization

Learn more in our detailed guide to: AWS EC2 Pricing


Learn more in our detailed guide to: Cloud Cost

Related product offering: Spot Eco | Cloud Commitments and Cost Management

What Is IoT in the Cloud?

The Internet of Things, or IoT, refers to the network of physical devices connected to the internet, all collecting and sharing data. When we talk about IoT in the cloud, we’re referring to using cloud computing to process and store the data collected by these devices.

IoT in the cloud allows businesses to improve efficiency and make more informed decisions. By using cloud-based analytics, companies can extract valuable insights from vast amounts of data generated by IoT devices. These insights can then be used to drive business growth and innovation.

Moreover, cloud-based IoT platforms can handle the massive influx of data from various devices and sensors in real-time. They provide the necessary scalability and storage capacity to manage this data effectively. Also, cloud-based IoT solutions offer advanced security features to ensure that the data remains protected from potential threats.

IaaS High Availability

High availability is an important principle of cloud computing. It is especially important for mission-critical systems, where downtime interrupts the business and is therefore unacceptable. Downtime can hurt productivity and lead to financial losses.

IaaS services are known for their ability to provide a high level of redundancy, spreading applications across multiple physical machines in different locations. They can also provide auto scaling, a mechanism that allows systems to automatically scale up to additional machines on the cloud when loads increase.
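One common way to express an auto scaling decision is a proportional rule: scale the instance count by the ratio of the observed load to a target load, then clamp the result to configured limits. The sketch below shows that rule in isolation. The function name, the CPU-based metric, and the limits are assumptions for illustration; each provider's auto scaling service has its own policies and parameters.

```python
import math


def desired_capacity(current_instances, avg_cpu_percent, target_cpu_percent,
                     min_instances, max_instances):
    """Proportional scaling: size the fleet so average CPU lands near the target."""
    raw = math.ceil(current_instances * avg_cpu_percent / target_cpu_percent)
    # Never scale below the floor or above the ceiling
    return max(min_instances, min(max_instances, raw))


scale_out = desired_capacity(4, avg_cpu_percent=90, target_cpu_percent=60,
                             min_instances=2, max_instances=10)
scale_in = desired_capacity(4, avg_cpu_percent=30, target_cpu_percent=60,
                            min_instances=2, max_instances=10)
capped = desired_capacity(4, avg_cpu_percent=150, target_cpu_percent=50,
                          min_instances=2, max_instances=10)
```

The minimum keeps enough redundancy during quiet periods, and the maximum bounds cost if load spikes unexpectedly.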

AWS High Availability Architecture

Amazon Web Services has built a massive global infrastructure to provide high availability and flexibility for customer workloads.

Amazon offers cloud services in 24 regions (see the map of the Amazon regions). Amazon defines a region as a geographic area that contains at least three isolated, physically separate availability zones (AZs).

Each AWS availability zone consists of one or more data centers with redundant power supplies, networks, and Internet connectivity. Currently, Amazon supports 77 Availability Zones worldwide. The AZs in a region are separated from one another by a “meaningful distance”, while all remaining within 100 km of each other. This makes it unlikely that a single physical disaster will take down every AZ in a region, while still enabling high-speed connections between them.
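The benefit of spreading a workload across several availability zones can be estimated with basic probability. The sketch below assumes that zone failures are independent and that the workload stays up as long as at least one zone is up; both are simplifying assumptions, and the 99% per-zone figure is a made-up input, not a published AWS number.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def combined_availability(single_zone_availability, zone_count):
    """Probability that at least one zone is up, assuming independent failures."""
    all_zones_down = (1 - single_zone_availability) ** zone_count
    return 1 - all_zones_down


def expected_downtime_minutes(availability):
    """Expected minutes of downtime per year at the given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


one_zone = combined_availability(0.99, 1)
two_zones = combined_availability(0.99, 2)
three_zones = combined_availability(0.99, 3)
```

Under these assumptions, each additional zone multiplies the probability of a total outage by the per-zone failure rate, so two zones at 99% give roughly 99.99% and three give roughly 99.9999%.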

Learn more in the in-depth guide to AWS auto scaling.

Azure High Availability Architecture

Like AWS, Azure also bases its high availability architecture on regions and availability zones. Azure Storage always keeps at least three copies of user data. With locally redundant storage (LRS), the copies are kept within a single data center; with zone-redundant storage (ZRS), they are spread across three availability zones in the region. Customers can opt for geo-redundant storage (GRS), which creates three additional copies of their data in a “paired region”, a designated second region that has fast connectivity with the first region, for added resilience.

Azure availability zones achieve high availability by distributing resources across multiple data centers in a customer’s region. Azure also provides services like Azure Site Recovery and Azure Backup that help customers achieve the required recovery point objective (RPO) and recovery time objective (RTO) for their applications.

Google Cloud SQL High Availability Architecture

In Google Cloud, resources that operate in one zone are called “zonal resources”. Other resources operate across an entire region and are called “regional resources”. For example, a Google Cloud virtual machine instance or persistent disk is a zonal resource, while a static IP address is a regional resource.

Google adds the concept of clusters—clusters are groups of physical computers inside a physical data center, with independent power, cooling, networking, and security infrastructure. This allows Google Compute Engine to balance customer resources across clusters in the same zone, while retaining high connectivity between the physical machines in each cluster.

AWS CloudFormation

Amazon Web Services (AWS) CloudFormation is a service that helps you automate the process of creating and managing infrastructure resources in the AWS Cloud. It allows you to use a template to create and provision resources in your AWS account. This template is written in JSON or YAML, and it specifies the resources and their properties that you want to create.

CloudFormation allows you to create, update, and delete resources in a predictable and automated way. It helps you standardize and automate the way you create and manage resources, making it easier to manage and maintain your infrastructure. CloudFormation also provides versioning for your templates, so you can track changes to your infrastructure over time.

You can use CloudFormation to create and manage resources such as Amazon EC2 instances, Amazon S3 buckets, and Amazon Virtual Private Clouds (VPCs). You can also use CloudFormation to create custom resources, using AWS Lambda functions or other AWS services.
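As a rough illustration of what such a template can look like, the sketch below builds a minimal one as a Python dictionary and serializes it to JSON. The logical ID `ExampleBucket` and the helper name `build_template` are made up for this example. `AWSTemplateFormatVersion`, `Resources`, `Type`, and the `AWS::S3::Bucket` resource type are standard template elements.

```python
import json


def build_template(bucket_logical_id: str = "ExampleBucket") -> dict:
    """Return a minimal CloudFormation template describing one S3 bucket.

    The logical ID is a hypothetical name chosen for this sketch.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example: a single S3 bucket",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Keep previous versions of objects in the bucket.
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
    }


# Serialize to JSON, one of the two formats CloudFormation accepts.
template_json = json.dumps(build_template(), indent=2)
print(template_json)
```

Because the template is plain data, it can be stored in version control and reviewed like any other code before it is used to create or update a stack.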

Learn more in the in-depth guide to: AWS CloudFormation.

Related product offering: Faddom | Instant Application Dependency Mapping Tool

Related technology updates: [Announcement] AWS Well Architected Reviews with CloudCheckr

AWS IaaS Services

Amazon S3

Amazon Simple Storage Service (S3) is one of the first and most popular Amazon services, providing object storage at virtually unlimited scale. S3 is easy to access via the Internet and programmatically via API, and is integrated into a wide range of applications. It provides 11 9’s of durability (99.999999999%), and offers several storage tiers, allowing users to move data that is used less frequently into a low-cost archive tier within S3.
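To put the durability figure in perspective, here is a back-of-the-envelope calculation. It assumes the figure is an annual, per-object durability and that losses are independent, which is a simplification. The function names are illustrative only.

```python
def expected_annual_losses(num_objects: int, nines: int = 11) -> float:
    """Expected number of objects lost per year at the given durability."""
    # 11 nines of durability means a 10^-11 chance of losing an object in a year.
    annual_loss_probability = 10.0 ** -nines
    return num_objects * annual_loss_probability


def years_per_lost_object(num_objects: int, nines: int = 11) -> float:
    """Average number of years between single-object losses."""
    return 1.0 / expected_annual_losses(num_objects, nines)


objects_stored = 10_000_000
print(expected_annual_losses(objects_stored))
print(years_per_lost_object(objects_stored))
```

Under these assumptions, storing ten million objects corresponds to losing about one object every ten thousand years on average.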

AWS EC2

Amazon Elastic Compute Cloud (Amazon EC2) offers scalable computing resources. It lets you run as many virtual servers as you want, configure your network and security, and manage storage. You can increase or decrease resources on-demand according to changing business requirements, and set up auto scaling to scale resources up and down according to actual workloads.

AWS EBS

Amazon Elastic Block Store (Amazon EBS) is a block-level storage service for use with Amazon EC2 instances. When mounted on an Amazon EC2 instance, you can use Amazon EBS volumes like any other raw block storage device. It can be formatted and mirrored for specific file systems, host operating systems, and applications.

AWS EFS

Amazon Elastic File System (Amazon EFS) provides a simple, scalable, and fully managed elastic NFS file system for use with AWS cloud services and on-premises resources. It can support up to petabytes of data, automatically scaling as files are added and removed, eliminating the need to configure and manage storage capacity.

AWS EMR

Amazon Elastic MapReduce (EMR) is a cloud-based big data platform for processing vast amounts of data using common open-source tools such as Apache Spark, HBase, Presto, and Flink. EMR is used for data analysis, machine learning, financial analysis, scientific simulation, and bioinformatics.

Customers can create EMR clusters using large or small instances depending on their compute and memory requirements. EMR integrates with AWS data stores such as Amazon S3 and DynamoDB, allowing you to analyze and visualize your data with business intelligence tools.

AWS Lambda

AWS Lambda is a serverless, on-demand compute service that provides developers with a fully managed, event-driven environment for executing code. Users package their code as a Lambda function, which the service runs in response to events, independently of other infrastructure and without any servers to provision or manage.
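A minimal sketch of a Python Lambda function follows. The two-argument `(event, context)` handler signature is the convention for Python handlers, while the event shape (a `name` key) and the response format are assumptions made for this example.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda would call once per triggering event."""
    # The "name" key is a hypothetical field in the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation for illustration; in Lambda, the service supplies both arguments.
response = lambda_handler({"name": "IaaS"}, None)
print(response["body"])
```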

AWS FSx

Amazon FSx is a fully-managed service that lets you launch, run, and scale high-performance file systems in the AWS cloud. AWS handles management tasks such as hardware provisioning, backups, and patching. The underlying infrastructure powering this service consists of the latest AWS networking, compute, and disk technologies.

AWS FSx offers various capabilities delivered as a reliable, secure, and scalable cloud service that achieves high performance and lower TCO. The service lets you choose a file system to support your storage, including NetApp ONTAP, Lustre, and Windows File Server. FSx provides full access to all feature sets, data management capabilities, and performance profiles.

AWS ECS

Amazon Elastic Container Service (ECS) is a highly scalable, high-performance container orchestration service that supports Docker containers and allows you to easily run and scale containerized applications on AWS. ECS eliminates the need for you to install, operate, and scale your own cluster management infrastructure. With simple API calls, you can launch and stop Docker-enabled applications, query the complete state of your application, and access many familiar features like security groups, Elastic Load Balancing, EBS volumes, and IAM roles.

ECS can be used in conjunction with AWS Fargate, a compute engine for Amazon ECS that allows you to run containers without having to manage servers or clusters. With AWS Fargate, you no longer have to provision, configure, and scale clusters of virtual machines to run containers. This removes the need to choose server types, decide when to scale your clusters, or optimize cluster packing.

AWS Fargate

AWS Fargate is a serverless compute engine for containers that works with both Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS). Fargate enables you to focus on building and deploying applications without the complexity of managing the underlying infrastructure. It allocates the right amount of compute, eliminating the need to choose instances and scale cluster capacity.

Fargate is designed for applications that require a balance of compute, memory, and networking resources and integrates with AWS services to provide a secure and efficient container execution environment. Pricing is based on the actual compute and memory resources used by the containerized application, offering a pay-as-you-go model that can lead to cost savings. However, Fargate is generally more expensive than EC2 for the same compute capacity.

AWS EKS

Amazon Elastic Kubernetes Service (EKS) is a managed service that makes it easier for users to run Kubernetes on AWS without needing to install, operate, and maintain their own Kubernetes control plane or nodes. Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

EKS runs the Kubernetes management infrastructure across multiple AWS availability zones, automatically detecting and replacing unhealthy control plane nodes, and providing on-demand, zero-downtime upgrades and patching.

AWS Bedrock

AWS Bedrock is a fully managed service that allows developers to build and scale generative AI applications using foundation models from various AI providers. It eliminates the need to manage underlying infrastructure and provides seamless integration with AWS services.

Bedrock supports multiple foundation models, enabling users to choose the best model for their use case, whether for text generation, image creation, or chatbot applications. It also offers fine-tuning capabilities and responsible AI tools to help businesses deploy AI solutions securely and ethically.

Learn more in the in-depth guide to AWS Bedrock

Related product offering: Anodot | Intelligent Cost Optimization Platform

AWS Secrets Manager

AWS Secrets Manager is a managed service that helps you securely store, manage, and retrieve sensitive information such as database credentials, API keys, and other secrets. It eliminates the need for hardcoding secrets in application code by providing automatic retrieval and rotation.

Secrets Manager integrates with AWS Identity and Access Management (IAM) and AWS Key Management Service (KMS) to enforce security best practices. It also provides audit logs via AWS CloudTrail, helping organizations meet compliance requirements.
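The sketch below shows the general pattern of fetching a secret at runtime rather than hardcoding it. It assumes `client` is a boto3 Secrets Manager client (for example, `boto3.client("secretsmanager")`) and that the secret is stored as a JSON string. The secret name and the `FakeSecretsClient` stand-in are hypothetical and exist only so the example runs without AWS access.

```python
import json


def load_db_credentials(client, secret_id: str) -> dict:
    """Fetch a JSON-formatted secret at runtime instead of hardcoding it.

    `client` is assumed to be a boto3 Secrets Manager client;
    `secret_id` is a hypothetical secret name.
    """
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class FakeSecretsClient:
    """Stand-in for the real client so the sketch runs offline."""

    def get_secret_value(self, SecretId: str) -> dict:
        payload = {"username": "app", "password": "example-only"}
        return {"SecretString": json.dumps(payload)}


creds = load_db_credentials(FakeSecretsClient(), "prod/db/credentials")
print(creds["username"])
```

Passing the client in as a parameter keeps the function easy to test and leaves credential and region configuration to the caller.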

Learn more in the in-depth guide to AWS Secrets Manager

Related product offering: Configu: Configuration Management Platform | Configuration-as-Code Automation for Secure Collaboration

Related technology update: [Blog] The state of config file formats: XML vs. YAML vs. JSON vs. HCL

AWS SNS

Amazon Simple Notification Service (SNS) is a fully managed messaging service that enables the delivery of notifications between distributed systems, microservices, and applications. It supports multiple messaging protocols, including SMS, email, HTTP/S, and AWS Lambda.

SNS allows developers to implement pub/sub messaging architectures, where messages are published to topics and then distributed to multiple subscribers. It integrates with AWS services like SQS and CloudWatch, enabling event-driven architectures at scale.
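The pub/sub pattern itself can be sketched with a small in-memory broker. This is not the SNS API; the `InMemoryBroker` class and the topic name are invented purely to show how one published message fans out to every subscriber of a topic.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Toy publish/subscribe broker illustrating topic fan-out."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        """Register a handler to receive every message published to the topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        """Deliver the message to all subscribers and return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


broker = InMemoryBroker()
email_inbox: List[str] = []
queue_inbox: List[str] = []

# Two independent subscribers listen on the same topic.
broker.subscribe("orders", email_inbox.append)
broker.subscribe("orders", queue_inbox.append)

delivered = broker.publish("orders", "order-created")
print(delivered, email_inbox, queue_inbox)
```

The publisher never references its subscribers directly, which is what allows new consumers to be added without changing the producing service.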

AWS CloudWatch

Amazon CloudWatch is a monitoring and observability service that provides real-time insights into AWS resources, applications, and services. It collects and analyzes metrics, logs, and events, helping users detect anomalies, troubleshoot issues, and optimize performance.

CloudWatch includes features like alarms, dashboards, and automated actions. It integrates with AWS Lambda, EC2, ECS, and other services to enable proactive monitoring and automated responses to infrastructure events.
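The following is a simplified model of how a threshold alarm might be evaluated, not CloudWatch's actual implementation. It assumes an alarm fires only when the most recent `evaluation_periods` datapoints all exceed the threshold. The state names mirror the three alarm states CloudWatch reports; the function itself is hypothetical.

```python
from typing import Sequence


def alarm_state(datapoints: Sequence[float], threshold: float, evaluation_periods: int) -> str:
    """Return the alarm state for a metric under a simplified threshold rule."""
    if evaluation_periods < 1:
        raise ValueError("evaluation_periods must be at least 1")
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    # Only the most recent datapoints are considered.
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


cpu_percent = [50.0, 85.0, 90.0, 95.0]
print(alarm_state(cpu_percent, threshold=80.0, evaluation_periods=3))
```

Requiring several consecutive breaches is a common way to avoid triggering automated actions on a single short spike.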

AWS Auto Scaling

AWS Auto Scaling monitors your applications and automatically adjusts capacity to maintain steady, predictable performance at the lowest possible cost. It provides a powerful interface that lets you build scaling plans for resources including Amazon EC2 instances and Spot Fleets, Amazon ECS tasks, Amazon DynamoDB tables and indexes, and Amazon Aurora Replicas.

AWS Auto Scaling lets you optimize costs and improve performance. It makes it possible to set scaling policies and set scaling targets manually, or have these created automatically based on an analysis of application loads.
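The idea of scaling toward a target can be illustrated with a simple proportional rule. This is a sketch under stated assumptions rather than the algorithm AWS uses: it scales capacity in proportion to how far a metric is from its target and clamps the result to configured limits. All names and values are illustrative.

```python
import math


def desired_capacity(
    current_capacity: int,
    current_metric: float,
    target_metric: float,
    min_capacity: int,
    max_capacity: int,
) -> int:
    """Scale capacity in proportion to metric/target, within the given bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Round up so the fleet is never left under-provisioned.
    raw = math.ceil(current_capacity * current_metric / target_metric)
    return max(min_capacity, min(max_capacity, raw))


# Four instances at 80% average CPU with a 50% target: scale out.
print(desired_capacity(4, 80.0, 50.0, min_capacity=2, max_capacity=10))
```

The minimum and maximum bounds matter as much as the target: the minimum protects availability during quiet periods, and the maximum caps spending during unexpected spikes.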

Learn more in the in-depth guide to AWS autoscaling

Related product offering: Spot Elastigroup | Spot Instance Automation

Related technology update: [Blog] Horizontal vs. Vertical Scaling

Azure IaaS Services

Linux Virtual Machines in Azure

Traditionally Azure focused on Windows virtual machines, but now has a robust offering for Linux users as well. Azure virtual machines (VMs) are scalable on-demand compute resources provided by Azure.

Microsoft Azure supports popular Linux distributions deployed and managed by multiple partners. Linux machine images are available in the Azure Marketplace for the following Linux distributions (more distributions are added on an ongoing basis):

- FreeBSD

- Red Hat Enterprise

- CentOS

- SUSE Linux Enterprise

- Debian

- Ubuntu

- CoreOS

- RancherOS

Azure Files

Azure Files is a cloud file storage service that provides access to server message block (SMB) file shares. These shares can be configured as part of an Azure storage account. Azure Files enables cloud-based virtual machines and on-premise applications to share files using standard protocols.

Azure Managed Disk

Azure managed disks are block-level storage volumes managed by Azure and used by Azure virtual machines. A managed disk is similar to a physical disk on a local server, but it is virtualized. For managed disks, you only need to specify the disk size and disk type and provision the disk; Azure handles the rest. The available disk types are:

- Standard hard disks (HDD)

- Standard SSD

- Premium SSDs

- Ultra disks—optimized for sub-millisecond latency

Azure Blob Storage

Azure Blob Storage is Microsoft’s object storage service, similar to Amazon S3. Blob storage is suitable for storing large amounts of unstructured data. Blob storage offers up to sixteen 9’s of durability, depending on the redundancy option chosen, and advanced security features including RBAC, encryption at rest, and advanced threat protection. It also supports lifecycle management and immutable storage (WORM), which can help protect against data loss and threats like ransomware.

HPC on Azure

Azure provides high performance computing (HPC) resources, which you can deploy purely on the public cloud, or combine with local HPC resources to create a hybrid HPC deployment. Azure provides an HPC head node which is used to schedule jobs and workloads, and a virtual machine scale set, with large numbers of VMs that can be used to run massively parallel workloads. These VMs can include both CPU and GPU hardware, depending on the type of processing required.

Learn more in the in-depth guide about HPC on Azure

SAP on Azure

A large variety of SAP applications can be deployed to Azure, using predefined virtual machines created and certified by SAP.

SAP HANA

You can run the SAP HANA in-memory database on Azure, using M-series VMs that scale up to 4TB memory, certified for use with SAP HANA. Another option is Mv2 VMs, the largest SAP HANA certified VMs in the public cloud, with 6TB of memory. Azure offers a service level agreement (SLA) of 99.99% for instances in high availability pairs, and 99.9% for standalone instances.
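To see what these SLA percentages mean in practice, the short calculation below converts an availability percentage into the maximum downtime it allows. It assumes a 365-day year; the function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def max_downtime_minutes(sla_percent: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime, in minutes, permitted by an availability SLA over a period."""
    return period_minutes * (1 - sla_percent / 100)


for sla in (99.99, 99.9):
    print(f"{sla}% -> {max_downtime_minutes(sla):.1f} minutes per year")
```

A 99.99% SLA allows roughly 53 minutes of downtime per year, while 99.9% allows about 8.8 hours, which is why high availability pairs are usually preferred for production SAP HANA workloads.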

SAP S/4HANA

You can deploy SAP S/4HANA on Azure, with remote connection via Azure ExpressRoute for Fiori applications. Azure provides an SLA of 99.99% if you run S/4HANA in two Azure availability zones. It also provides backup and recovery in seconds, even for databases with multiple TBs of data.

VDI on Azure

Microsoft’s virtual desktop infrastructure (VDI) offering on Azure, Windows Virtual Desktop, provides multi-session support for Windows 10. Azure provides FSLogix profile containers, which decouple user profiles from the underlying operating system. Azure recently launched MSIX app attach, which allows you to package a Win32 application in an MSIX application container.

Google Cloud IaaS Services

Google Cloud Storage

Google Cloud Storage is an object storage service by Google Cloud. It provides features like object versioning and extended permissions (per item or bucket). Google Cloud offers two archive storage tiers with lower pricing and fast retrieval times, called Nearline and Coldline.

Google Cloud Filestore

Google Cloud Filestore uses NFS version 3 and is designed for workloads requiring low latency and minimal performance fluctuations. This service has two levels of performance: standard and premium. The premium tier can support very high performance: 700 Mbps for reads, 350 Mbps for writes, and a maximum of 30,000 IOPS.

Google Persistent Disk

In Google Cloud, a Persistent Disk is a storage device that you can access from a virtual machine, like a physical hard drive. The data is spread across multiple physical hard drives in the Google data center. Google Compute Engine manages the distribution of data for optimal redundancy and performance.

Adopting IaaS: Cloud Migration Strategies

Following are the most common approaches to cloud migration, taken from the influential “5 Rs” model proposed by Gartner.

Rehosting

Rehosting (also known as “lift and shift”) is the fastest way to move your application to the cloud. This is usually the first approach taken in a cloud migration project because it allows moving the application to the cloud without any changes. Both physical and virtual servers are migrated to infrastructure as a service (IaaS). Lift and shift is commonly used to improve performance and reliability for legacy applications.

Replatforming, Refactoring, or Re-architecture

This migration strategy involves detailed planning and a high investment, but it is the only strategy that can help you get the most out of the cloud. Applications that undergo replatforming or re-architecture are completely rebuilt on cloud-native infrastructure. They scale up and down on-demand, are portable between cloud resources and even between different cloud providers.

Repurchasing

In most cases, repurchasing is as easy as moving from an on-premise application to a SaaS platform. Typical examples are switching from internal CRM to Salesforce.com, or switching from internal email server to Google’s G Suite. It is a simple license change, which can reduce labor, maintenance, and storage costs for the organization.

Retire

When planning a move to the cloud, it often turns out that part of the company’s IT product portfolio is no longer useful and can be decommissioned. Removing old applications allows you to focus time and budget on high priority applications and improve overall productivity.

Retain

Moving to the cloud doesn’t make sense for all applications. You need a strong business case to justify migration costs and downtime. Additionally, some industries require strict compliance with laws that prevent data migration to the cloud. Some on-premises solutions should be kept on-premises, and can be supported in a hybrid cloud migration model.

Now that we’ve covered some of the general strategies for migrating workloads to the cloud, let’s dive deeper into specific best practices for migrating to each of the big three cloud providers: AWS, Azure, and Google Cloud.

AWS Migration Best Practices

Leverage AWS Tools

AWS offers a wide range of tools designed for the migration process, from the initial planning phase to features for post-migration. Here are several useful tools to consider:

- AWS Migration Hub – a dashboard that centralizes data and helps you monitor and track the progress of migration.

- AWS Application Discovery Service – collects data needed for pre-migration due diligence.

- TSO Logic – offers data-driven recommendations based on predictive analytics. The recommendations are tailored to help during the planning and strategizing phase.

- AWS Server Migration Service – provides automation, scheduling, and tracking capabilities for incremental migrations.

- AWS Database Migration Service – keeps the source data store fully-operational while the migration is in process, to minimize downtime.

- Amazon S3 Transfer Acceleration – improves the speed of data transfers made to Amazon S3, to maximize available bandwidth.

Automate Repetitive Tasks

The migration process typically involves many repetitive tasks. You can perform these tasks manually or automate them. The main purpose of automation is to achieve a higher level of efficiency while reducing costs. In many cases, automation can also help you complete tasks much faster than is possible manually.

Outline and Share a Clear Cloud Governance Model

A cloud governance model defines and specifies the practices, roles, responsibilities, tools, and procedures involved in the governance of your cloud environments. Your model needs to be as clear as possible, to ensure all relevant stakeholders understand how cloud resources should be managed and used. Ideally, you should define this information before migrating.

Here are several questions your cloud governance model should answer:

- What controls are set in place to meet security and privacy requirements?

- How many AWS accounts are maintained?

- What privileges are enabled for each role?

There are many more considerations to address in your cloud governance model, depending on your industry and business needs. Be sure to keep your documentation flexible to allow for change and optimization after the migration process is completed and your workloads settle in the new cloud environment.

Azure Migration Best Practices

Azure Migration Tools

Azure offers several migration tools designed to simplify and automate the migration process. Here are three commonly used Azure migration tools:

- Azure Migrate—helps you to assess your local workloads, determine the required size of cloud resources, and estimate cloud costs.

- Microsoft Assessment and Planning—helps you discover your servers and applications and build an inventory. Additionally, this tool can create reports that determine whether Azure can support your workloads.

- Azure Database Migration Service—helps you migrate on-premise SQL Server workloads to Azure.

Cost Management in Azure

Cloud resources are highly accessible and flexible, but costs can quickly skyrocket if you don’t have a cost management strategy in place. Here are several tools and techniques you can use to manage your cloud costs:

- Tag your resources – to manage costs, you need visibility into cloud resource consumption. You can set this up by tagging resources and monitoring them. Be sure to use standard tags and keep this organized.

- Use policies – to automate tagging and monitoring. Cloud resources are highly scalable and this can make manual tagging and monitoring incredibly time consuming. Use policies to standardize the process and automation to enforce these rules.

You can leverage either third-party or first-party tools for tagging. There are also tools dedicated to cost management, optimization, and monitoring. In addition, you can set up role-based access control (RBAC) to ensure resources are used only by authorized users, and organize resources into several resource groups.
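As a small illustration of why consistent tagging matters, the sketch below groups monthly costs by a tag and lists resources that are missing it. The resource records, field names, and the `cost-center` tag are hypothetical sample data, not output from any Azure API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def cost_by_tag(resources: List[dict], tag_key: str) -> Tuple[Dict[str, float], List[str]]:
    """Sum monthly cost per tag value and collect IDs of resources lacking the tag."""
    totals: Dict[str, float] = defaultdict(float)
    untagged: List[str] = []
    for resource in resources:
        tag_value = resource.get("tags", {}).get(tag_key)
        if tag_value is None:
            untagged.append(resource["id"])
        else:
            totals[tag_value] += resource["monthly_cost"]
    return dict(totals), untagged


# Hypothetical inventory for illustration only.
inventory = [
    {"id": "vm-1", "monthly_cost": 100.0, "tags": {"cost-center": "web"}},
    {"id": "vm-2", "monthly_cost": 50.0, "tags": {"cost-center": "web"}},
    {"id": "db-1", "monthly_cost": 300.0, "tags": {"cost-center": "data"}},
    {"id": "disk-9", "monthly_cost": 20.0, "tags": {}},
]

totals, untagged = cost_by_tag(inventory, "cost-center")
print(totals, untagged)
```

The untagged list is often the most useful output, since spending that cannot be attributed to an owner is the hardest to control.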

Review Every Policy and Procedure

Policies and procedures are a foundational component of the migration process and heavily impact the success of the implementation. To ensure your migration runs smoothly, you should define and review all policies and then apply them in a cohesive and standardized manner.

Properly implementing policies helps ensure all required security measures are in place. Policies not only enforce security, but also help you achieve and maintain compliance. Data encryption, for example, is a requirement you can enforce using a policy.

Once you define your policies and procedures, you should test them before running in production. You can automate this process using several tools. Azure Migrate, for example, can help you automatically identify, assess, and migrate your local VMs to the Azure cloud.

Google Cloud Migration Best Practices

Moving Data

Here are several aspects to consider when migrating to Google Cloud:

- Move your data first – and then move the rest of the application. This is recommended by Google.

- Choose the relevant storage – Google Cloud offers several tiers for hot and warm storage, as well as several archiving options. You can also leverage SSDs and hard disks, or choose a cloud-based database service, such as Bigtable, Datastore, or Google Cloud SQL.

- Plan the data transfer process – determine and define how to physically move your data. You can, for example, send your offline disk to a Google data center or opt to stream to persistent disks.

Moving Applications

There are several ways to migrate applications, depending on the application’s suitability to the cloud. In some cases, you might need to re-architect the entire application before it can be moved to the cloud. In other cases, you might need to do light modification before the migration. Ideally, when possible, your application can be lifted and shifted to the cloud.

A lift and shift migration means you do not need to make any changes to your application. You can lift it and move it directly to the new cloud environment. For example, you can create a local VM within your on-premises data center, and then import it as a Google VM. Alternatively, you can back up your application to GCP – this option lets you automatically create a cloud copy.

Optimize

After the migration process is complete and your application is safely hosted in the cloud, you need to set up measures that help you continuously optimize your cloud environment. Here are several tools offered by Google:

- Google Cloud operations suite (Stackdriver) – provides features that enable full observability into your Google Cloud environment. The information is centralized in a single database that lets you run queries and leverage root-cause analysis to gain detailed insights.

- Google Cloud Pub/Sub – helps you set up communication between any independent applications. You can use Pub/Sub to rapidly scale, decouple applications, and improve performance.

- Google Cloud Deployment Manager – lets you automate the configuration of your applications. You specify the requirements and Deployment Manager automatically initiates the deployments.

Running Mission Critical Applications in the Cloud

A mission-critical application relies on continuous availability and cannot tolerate even brief downtime. An outage can cause financial, reputational, and operational damage to an entire business or a segment of it.

When provisioning a mission critical application, you need to ensure stability and availability at all times. You can achieve this by creating redundant copies of your application and hot backups. Additionally, you can duplicate your production and staging environments and test them.

Large enterprises typically use the following three types of mission critical applications:

- Backup and disaster recovery – strategies are critical to ensure business continuity. Your recovery strategy should provide a short recovery time objective (RTO) and minimize the recovery point objective (RPO).

- Enterprise Resource Planning (ERP) – systems manage business processes, providing capabilities to manage finances, manufacturing, distribution, the supply chain, human resources, and more. ERPs must remain operational at all times.

- Virtual Desktop Infrastructure (VDI) – solutions help you remotely deliver a desktop image to endpoint devices over a network. VDI technology enables users to access mission critical applications on their smartphones, laptops, and thin-client devices.
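The recovery objectives mentioned for backup and disaster recovery can be checked with simple arithmetic. The sketch below assumes that worst-case data loss equals one full backup interval and that restore time is known from testing. The function name and the sample values are illustrative.

```python
from datetime import timedelta


def meets_objectives(
    backup_interval: timedelta,
    restore_duration: timedelta,
    rpo: timedelta,
    rto: timedelta,
) -> bool:
    """Check a backup schedule against recovery point and recovery time objectives."""
    # Worst case, a failure happens just before the next backup,
    # so up to one full interval of data can be lost.
    worst_case_data_loss = backup_interval
    return worst_case_data_loss <= rpo and restore_duration <= rto


# Hourly backups and a 30-minute restore against a 15-minute RPO and 1-hour RTO.
ok = meets_objectives(
    backup_interval=timedelta(hours=1),
    restore_duration=timedelta(minutes=30),
    rpo=timedelta(minutes=15),
    rto=timedelta(hours=1),
)
print(ok)
```

In this example the restore time fits the RTO but hourly backups cannot satisfy a 15-minute RPO, so the schedule would need more frequent backups or continuous replication.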

Traditionally, mission-critical applications are hosted on-premises, but today many of these applications are moving to cloud environments. The cloud can provide enterprises with a high level of flexibility and scalability. Ideally, if cloud resources are properly utilized and optimized, enterprises can significantly reduce their costs by moving to the cloud.

There are, however, several challenges enterprises face when migrating their mission-critical applications to the cloud. The migration process itself takes time and comes with its own set of risks. For many enterprises, security and compliance are critical and must be maintained throughout.

The cost of migration and unforeseen overhead can also run high. However, it is possible to address these challenges. To successfully migrate mission critical applications, enterprises can leverage techniques and solutions designed for the migration process. Planning the migration is especially helpful to minimize overhead and ensure known challenges are addressed in advance.

Deep Learning in the Cloud

Deep learning is at the center of most artificial intelligence (AI) initiatives. It is based on the concept of a deep neural network, which passes inputs through multiple layers of connections. Neural networks can perform many complex cognitive tasks, improving performance dramatically compared to traditional machine learning algorithms. However, they often require huge data volumes to train and can be very computationally intensive.
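The idea of passing inputs through multiple layers can be shown with a tiny forward pass in plain Python. This is only a sketch: the weights are arbitrary illustrative numbers rather than trained values, and ReLU is applied after every layer for simplicity.

```python
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def relu(values: List[float]) -> List[float]:
    """Replace negative activations with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Compute one fully connected layer: a weighted sum per output neuron plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Pass the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = relu(dense(activations, weights, biases))
    return activations


# Two inputs -> two hidden neurons -> one output, with made-up weights.
network: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[2.0, 1.0]], [0.5]),
]
output = forward([1.0, 2.0], network)
print(output)
```

Real networks repeat these multiply-and-add operations across millions of weights and training examples, which is why training is so computationally intensive.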

Deep learning is often a time-consuming and costly endeavor, especially regarding training models. Many factors can impact the process, but processing power is critical to ensure the pipeline works effectively. Graphics processing units (GPUs) provide the processing power needed for computationally intensive operations, but setting this up is not affordable for all organizations.

Cloud computing vendors provide various services to help make deep learning more affordable and accessible. Services may vary between vendors, but most can help you manage large datasets and train algorithms on distributed hardware. Here are notable offerings from the top cloud vendors – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud:

AWS

AWS provides four instance options, available in multiple sizes. These include EC2 P2, P3, G3, and G4 instances. With these instances, you can choose to access NVIDIA Tesla M60, T4 Tensor, K80, or V100 GPUs and can include up to 16 GPUs per instance.

With AWS, you also have the option of using Amazon Elastic Graphics. This service enables you to connect your EC2 instances to various low-cost GPUs. You can attach GPUs to any compatible instance for greater workload flexibility. The Elastic Graphics service also provides up to 8GB of memory and supports OpenGL 4.3.

Azure

Azure provides several choices for GPU-based instances. These instances are designed for high computation tasks, including deep learning, simulations, and visualizations.

In Azure, you can choose from three instance series:

- NC-series – optimized for compute and network-intensive workloads. These instances can support OpenCL and CUDA-based applications and simulations. They pair NVIDIA GPUs such as the Tesla V100 with Intel Broadwell or Haswell CPUs.

- NV-series – optimized for visualizations, encoding, streaming, and virtual desktop infrastructures (VDI). These instances support OpenGL and DirectX. Available GPUs include AMD Radeon Instinct MI25 and NVIDIA Tesla M60 GPUs.

- ND-series – optimized for deep learning training scenarios and inference. They pair NVIDIA GPUs such as the Tesla P40 with Intel Skylake or Broadwell CPUs.

Google Cloud

Although Google Cloud doesn’t offer dedicated instances with GPUs, it does enable you to connect GPUs to existing instances. This works with standard instances and Google Kubernetes Engine (GKE) instances. It also enables you to deploy node pools that include GPUs. Support is available for NVIDIA Tesla V100, P4, T4, K80, and P100 GPUs.

Another option in Google Cloud is access to Tensor Processing Units (TPUs). These are custom chips that Google designed for machine learning workloads, rather than GPUs. TPUs are designed to perform matrix multiplication quickly and can provide performance similar to Tensor Core enabled Tesla V100 instances. Currently, PyTorch provides partial support for TPUs.

Kubernetes in the Cloud

Kubernetes on AWS

Kubernetes lets you use existing on-premises and cloud-based tools to run containerized applications. It can manage clusters of AWS EC2 instances, deploying, running, maintaining, and scaling containers on EC2 instances.

AWS helps you easily manage Kubernetes infrastructure with Amazon EC2 or employ Amazon EKS for automatic provisioning and management. You can also leverage community-backed integrations to AWS services, such as IAM, VPC, and service discovery.

Kubernetes on Azure

Azure Kubernetes Service (AKS) enables you to deploy a managed Kubernetes cluster in the Azure cloud. AKS is a hosted Kubernetes service that reduces the operational overhead and complexity of managing Kubernetes by offloading much of that responsibility to Azure. Azure handles health monitoring and maintenance and manages Kubernetes masters, so you can focus on managing and maintaining agent nodes.

Kubernetes on Google Cloud

Google Kubernetes Engine (GKE) is an orchestration system for Docker containers and container clusters running in Google’s public cloud. It is based on Kubernetes, which Google originally developed for container management. You can use the gcloud CLI or the Google Cloud Platform Console to interact with this service.

GKE employs a group of instances to run Kubernetes. It allocates a master node to manage a cluster of Docker containers and runs a Kubernetes API server that interacts with the cluster and performs various tasks, including scheduling containers. A cluster may also include one or more additional nodes that run a Docker runtime and kubelet agent to manage containers.

See Additional Guides on IaaS Topics

What Is Cloud Hosting

Related guides

Authored by Atlantic.Net

Related product offering: Atlantic.Net Dedicated Server Hosting | High Performance Dedicated Servers

Offered by Atlantic.Net

- Managed Private Cloud Hosting

- Atlantic.Net Orlando Colocation Data Center

- Server Management Services

What Is Dedicated Server Hosting

Related guides

Authored by Atlantic.Net

- What Is Dedicated Server Hosting: Benefits, Types, and Costs

- GPU Dedicated Server Hosting: A Buyer’s Guide

Related product offering: Atlantic.Net Dedicated Server Hosting | High Performance Dedicated Servers

Offered by Atlantic.Net

Related technology updates:

- [Blog] How to Secure SSH Server on Arch Linux

- [Blog] 9 Essential Features of Dedicated Server Hosting

Hybrid Cloud

Related guides

Authored by Faddom

- Hybrid Cloud: Architecture, Use Cases & 5 Critical Best Practices

- Hybrid Cloud Architecture: Is It Right for Your Business?

- Hybrid Cloud with AWS: 5 Tools and a Reference Architecture

Related product offering: Faddom | Instant Application Dependency Mapping Tool

Related technology updates: [Report] Optimizing in a Multi-Cloud World

Infrastructure as Code

Related guides

Authored by Codefresh

- Infrastructure as Code: Benefits, Platforms & Tips for Success

- Infrastructure as Code on AWS: Process, Tools & Best Practices

- Why You Need Immutable Infrastructure and 4 Tips for Success

Related product offering: GitOps Software Delivery Platform

Offered by Codefresh

- CI/CD Platform with GitOps

- Enterprise Support for Argo

- Secure Distribution for Argo CD and Argo Rollouts

Related technology update: [Blog] How to Model Your GitOps Environments and Promote Releases between Them

Load Balancer

Related guides

Authored by Radware

- What is a Load Balancer? History, Key Functions, Pros and Cons

- What is Global Server Load Balancing (GSLB)?

Related product offering: Application Delivery and Security

Offered by Radware

AWS CloudFormation

Related guides

Authored by Faddom

Related product offering: Instant Application Dependency Mapping Tool

Offered by Faddom

Related technology updates: [Announcement] AWS Well Architected Reviews with CloudCheckr

Cloud Computing Costs

Related guides

Authored by Faddom

- AWS EC2 Pricing: A Beginners Guide

- Azure VMs Pricing Beginners Guide

- Cloud Computing Costs & Pricing Comparison for 2023

Related product offering: Instant Application Dependency Mapping Tool

Offered by Faddom

Related technology updates: [Report] GigaOm 2024 Radar for Cloud Resource Optimization

Cloud Cost Management

Related guides

Authored by Finout

- Why Cloud Cost Management, 5 Tools to Know, and Tips for Success

- What Is IT Financial Management (ITFM) and 5 Steps to Adoption

- Cloud Storage Pricing – Updated for 2025

Related product offering: Enterprise-Grade FinOps Platform

Offered by Finout

Related technology updates:

- [Webinar] Driving a Successful Company-Wide FinOps Cultural Change

- [Webinar] Mastering Kubernetes Spend-Efficiency

FinOps

Related guides

Authored by Finout

- Cloud FinOps: Ultimate Guide to Principles, Tools & Practices

- AWS FinOps: Why, How, and 6 Tools to Get You Started

- FinOps: Principles and Lifecycle Guide

Related product offering: Enterprise-Grade FinOps Platform

Offered by Finout

AWS Cost Optimization

Related guides

Authored by Finout

- AWS Cost Optimization: 6 Free Tools & 10 Hacks to Cut AWS Bills

- What Are AWS Spot Instances, Pros/Cons, and 6 Ways to Save Even More

- Top 5 Free & Open Source AWS Cost Optimization Tools

Related product offering: Enterprise-Grade FinOps Platform

Offered by Finout

Related technology update: [Webinar] How To Create a Cost-Effective AWS Environment

Snowflake Pricing

Related guides

Authored by Seemore Data

- Complete Guide to Understanding Snowflake Pricing

- Snowflake Storage Costs: The Complete Guide for 2024

CloudHealth

Related guides

Authored by Finout

- VMware CloudHealth: Solution Overview, Pros/Cons & Alternatives

- CloudHealth Pricing Tiers and Contract Structure Explained

- CloudHealth Competitors to Watch in 2025

Related product offering: Enterprise-Grade FinOps Platform

Offered by Finout

- Databricks Cost Optimization & Cost Management Tool

- Kubernetes Cost Monitoring & Management Tool: K8s Cost Reduction

Digital Asset Management

Related guides

Authored by Cloudinary

- Digital Asset Management (DAM) Users, Features, and Benefits

- Reimagining DAM: The next-generation solution for marketing & development

- MAM vs ECM: What’s the Difference?

Related product offering: Cloudinary Assets | AI-Powered Digital Asset Management

Related technology updates:

- [Blog] New for DAM: Media Library Extension for Chrome

- [Blog] Best Image Optimization Techniques: Expert Roundup

Learning Management System

Related guides

Authored by Kaltura

- Learning Management Systems (LMS): 6 Key Features and Top Use Cases

- Corporate Learning Management System: 11 Solutions to Know in 2025

- Healthcare learning management system: Challenges & best practices

AWS Secrets Manager

Related guides

Authored by Configu

Related product offering: Configu: Configuration Management Platform | Configuration-as-Code Automation for Secure Collaboration

Related technology update: [Blog] The state of config file formats: XML vs. YAML vs. JSON vs. HCL

AWS Bedrock

Related guides

Authored by Umbrella

- Complete 2024 Guide to Amazon Bedrock: AWS Bedrock 101

- AWS Bedrock Pricing: Your 2024 Guide to Amazon Bedrock Costs

- AWS Bedrock vs Azure OpenAI – Which Platform Is Best For You

Related product offering: Intelligent Cost Optimization Platform

Offered by Umbrella

Multicloud

Related guides

Authored by Umbrella

- What Is Multicloud? Benefits, Use Cases, Challenges and Solutions

- Multicloud Strategy: Pros, Cons, and Tips for Success

- Multicloud Management: How It Works & 5 Critical Best Practices

Related product offering: Intelligent Cost Optimization Platform

Offered by Umbrella

- Multicloud Cost Visibility for FinOps

- Boost your cloud efficiency with automated waste detection

- Detect and resolve cloud cost anomalies in real-time

AWS Autoscaling

Related guides

Authored by Spot.io

- AWS Auto Scaling: Scaling EC2, ECS, RDS, and more

- EC2 Autoscaling: The Basics, Getting Started & 4 Best Practices

- Understanding EC2 Auto Scaling Groups

Related product offering: Spot Elastigroup

Offered by Spot.io

AWS EC2 Pricing

Related guides

Authored by Spot.io

- AWS EC2 Pricing: The Ultimate Guide

- The Complete Guide to EC2 Instance Pricing

- AWS Reserved Instances: Ultimate Guide [2024]

Related product offering: Spot Elastigroup

Cloud Cost

Related guides

Authored by Spot.io

- Cloud Cost: 4 Cost Models and 6 Cost Management Strategies

- 5 Cloud Pricing Factors and Examples from Top Cloud Providers

- 9 Free Cloud Cost Management Tools

Related product offering: Spot Eco

Offered by Spot.io

Cloud Optimization

Related guides

Authored by Spot.io

- Cloud Optimization: The 4 Things You Must Optimize

- What Is Cloud Automation? Use Cases and Best Practices

- Cloud Infrastructure: 4 Key Components and Deployment Models

Related product offering: NetApp Spot

CloudOps

Related guides

Authored by Spot.io

Related product offering: NetApp Spot

EC2 Instances

Related guides

Authored by Spot.io

- AWS EC2 Spot Instances Workload Automation

- Which EC2 Instance Type is Right for You?

- How the EC2 API Works and a Quick Tutorial to Get Started

Related product offering: Spot Elastigroup

Offered by Spot.io

Related technology update: [Announcement] Cost Intelligence and Billing Launch

Google Cloud Pricing

Related guides

Authored by Spot.io

Related product offering: Spot Elastigroup

Spot Instances

Related guides

Authored by Spot.io

- Ultimate Guide to Spot Instances on AWS, Azure & Google Cloud

- Spot Instances vs. On-Demand Instances: Pros and Cons

- Spot Instances vs. Reserved Instances: How to Choose

Related product offering: Spot Elastigroup

Multi Tenant Architecture

Related guides

Authored by Frontegg

- Multi-Tenant Architecture: How It Works, Pros, and Cons

- Multi-Tenancy in Cloud Computing: Basics & 5 Best Practices

- Multi-Tenant vs. Single-Tenant: What Is the Difference?

Additional IaaS Resources

- What Is Cloud Orchestration?

- 4 AWS File Systems Compared

- ECS Vs. Plain Kubernetes: 5 Key Differences and How to Choose

- Benefits of Cloud Migration: Do They Outweigh the Cost?

- Cloud Orchestration and its Impact on DevOps Teams

- AWS Fargate pricing: how to optimize billing and save costs

- The Complete Guide to EC2 Instance Pricing

- Azure Kubernetes: The Basics and a Quick Tutorial

- Moving HPC To The Cloud: A Guide For 2020

- Virtualization in Cloud Computing: Behind the Scenes

- HIPAA Compliance in the Cloud: A Quick Start Guide

- Azure Encryption Explained

- Understanding Azure Time Series Insights

- 3 Azure Migration Services and How They Cut Migration Costs

- What Is a Cloud Operations Engineer?

- How to Become a Cloud Operations Engineer

- Cloud Capacity Planning: A Practical Guide

Which Service to Choose?

Whether you choose an infrastructure as a service or a platform as a service model, cloud servers are a practical solution in today’s world of constantly evolving technology. Atlantic.Net has been providing businesses with hosting solutions since 1994, including our super-fast VPS Hosting. To learn more about our cloud server hosting solutions, including HIPAA-compliant hosting, give us a call today at 800-521-5881.